New releases of Zeebe and Operate are available now: Zeebe 0.24.1 and Operate 0.24.2. You can grab the releases via the usual channels:

As usual, if you’d like to get started immediately, you can find information about it directly on the Zeebe & Operate documentation website.

Both Zeebe 0.24.1 and Operate 0.24.2 are fully backwards compatible with previous versions. For Camunda Cloud users, 0.24.1 is already the latest production version, meaning by the time you read this post, your existing clusters should have been upgraded.

Here are some highlights:

- Zeebe
  - Many, many bug fixes
  - Standalone gateway liveness probes
  - Docker distribution can now run as non-root user
  - Job worker metrics in the Go client
  - FEEL expression support for message names
- Operate
  - Flexible data schema migration
  - Improved handling of unnamed activities

In the rest of this post, we’ll go into more detail about the changes that the latest stable releases bring.

What happened to 0.24.0?

Late in the release cycle, we detected a breaking change in 0.24.0 that prevents users from performing a rolling upgrade. It is a change in the broker messaging protocol which leaves brokers running different versions of Zeebe unable to talk to each other. This breaks the standard Kubernetes rolling upgrade, where nodes are upgraded one at a time and the next node is only upgraded once the previous one is ready. As the first upgraded node never becomes ready, the upgrade stalls forever.

In order to preserve backwards compatibility between 0.23.x and 0.24.x, 0.24.1 nodes cannot speak to 0.24.0 nodes, so it is recommended to upgrade directly from 0.23.x to 0.24.1 and skip 0.24.0 altogether.
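To make the failure mode concrete, here is a small Go simulation of a rolling upgrade. It is a sketch under simplifying assumptions: the `compatible` rule is hypothetical and only encodes the statement above that 0.24.0 cannot talk to other versions, and "ready" is modelled as "can talk to every other broker", which is far cruder than a real Kubernetes readiness probe.

```go
package main

import "fmt"

// compatible reports whether brokers running versions a and b can talk to each
// other. Hypothetical rule for this sketch: 0.24.0 uses a messaging protocol
// that no other version speaks, and all other versions are mutually compatible.
func compatible(a, b string) bool {
	return (a == "0.24.0") == (b == "0.24.0")
}

// rollingUpgrade upgrades brokers one at a time and only moves on once the
// freshly upgraded broker is "ready", modelled here as being able to talk to
// every other broker. It returns how many brokers were upgraded and whether
// the rollout completed.
func rollingUpgrade(versions []string, target string) (int, bool) {
	for i := range versions {
		versions[i] = target
		for j := range versions {
			if j != i && !compatible(versions[i], versions[j]) {
				// broker i never becomes ready, so the rollout stalls here
				return i + 1, false
			}
		}
	}
	return len(versions), true
}

func main() {
	for _, target := range []string{"0.24.0", "0.24.1"} {
		cluster := []string{"0.23.x", "0.23.x", "0.23.x"}
		upgraded, done := rollingUpgrade(cluster, target)
		fmt.Printf("0.23.x -> %s: upgraded %d, completed %v\n", target, upgraded, done)
	}
}
```

Upgrading from 0.23.x to 0.24.0 stalls after the first broker, while going straight to 0.24.1 completes; the same model also stalls when moving from 0.24.0 to 0.24.1.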

Zeebe 0.24.1

As the highlights show, this release focused on stability above all else, with the bulk of the development work going towards bug fixes, most of which have already been released over the last three months as part of patch releases for 0.23.x and 0.22.x.

Workflow engine improvements

Initialize multi-instance outputElement to local scope

Multi-instance activities can define their outputElement using a FEEL expression, which gets resolved at runtime. If it resolved to a single variable, or a nested property of a variable, that variable could previously be overwritten by different iterations of the same multi-instance activity, as each instance would effectively see the same variable. To avoid this source of errors, the outputElement variable is now initialized to nil in the local scope of each instance.
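The difference between a shared and a local scope can be illustrated with a toy model in Go. This is not how Zeebe evaluates variables internally: `scope`, `runIterations` and the `result` variable are hypothetical, and the interleaving (every iteration writes before any output is read back) is just one possible schedule for parallel instances.

```go
package main

import "fmt"

// scope is a toy variable scope: variable name -> value.
type scope map[string]any

// runIterations models a parallel multi-instance activity whose iterations all
// complete their work (each writing "result") before their outputElement
// "result" is read back. With sharedScope, every iteration resolves "result"
// in one common scope; otherwise each gets a local scope in which "result" is
// initialised to nil.
func runIterations(items []string, sharedScope bool) []any {
	common := scope{}
	scopes := make([]scope, len(items))
	for i := range items {
		if sharedScope {
			scopes[i] = common // maps are references: every iteration sees the same one
		} else {
			scopes[i] = scope{"result": nil} // nil-initialised local variable
		}
	}
	for i, item := range items {
		scopes[i]["result"] = "processed " + item
	}
	outputs := make([]any, len(items))
	for i := range items {
		outputs[i] = scopes[i]["result"]
	}
	return outputs
}

func main() {
	items := []string{"a", "b", "c"}
	fmt.Println(runIterations(items, true))  // every output is the last write
	fmt.Println(runIterations(items, false)) // each iteration keeps its own value
}
```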

Define message names using FEEL

Continuing the work of the previous release, message names can now also be defined using FEEL expressions. This lets you resolve the message name at runtime, whereas previously it could only be assigned a static value at design time.

Possible race conditions when interrupting parallel executions

Zeebe supports parallel execution of workflow instance elements via a few mechanisms – parallel multi-instance elements, non-interrupting boundary events or event sub-processes, and parallel fork and join gateways. We found two issues where cancelling a workflow instance waiting on a parallel join gateway (#4352 and #4400) would result in frozen instances which were unrecoverable, both of which have now been fixed.

Docker distribution improvements

One of the main issues with the previous Docker distribution was that it expected to run as root. While that was useful in development, it prevented users from running it in certain restricted or untrusted environments, notably OpenShift. Thanks to user @coolpalani, the official Zeebe Docker distribution can now run as UID 1000. A few other minor improvements turned the build process into a multi-stage Dockerfile, reducing the compressed size of the image by a little under 50%.

Cluster membership improvements

Cluster membership in Zeebe is determined via gossip – more specifically, an implementation of the SWIM algorithm.
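The core of SWIM’s failure detector is small enough to sketch. The Go code below is a simplified illustration of the published algorithm, not Zeebe’s implementation: it leaves out suspicion timeouts, incarnation numbers and the piggybacked dissemination of membership updates, and `probe` and `network` are hypothetical names.

```go
package main

import "fmt"

type status string

const (
	alive   status = "alive"
	suspect status = "suspect"
)

// network reports whether a ping from one member to another gets acknowledged.
type network func(from, to string) bool

// probe runs one SWIM-style failure-detection step from self against target:
// a direct ping first, then indirect pings relayed through up to k other
// members. Only if no ack arrives by either route is the target suspected.
// Real SWIM picks the k helpers at random; this sketch takes them in order.
func probe(self, target string, members []string, k int, net network) status {
	if net(self, target) {
		return alive
	}
	asked := 0
	for _, m := range members {
		if asked == k {
			break
		}
		if m == self || m == target {
			continue
		}
		asked++
		// indirect probe: self -> m -> target, with the ack relayed back via m
		if net(self, m) && net(m, target) {
			return alive
		}
	}
	return suspect
}

func main() {
	members := []string{"broker-0", "broker-1", "broker-2"}
	// only the direct link from broker-0 to broker-2 is broken
	flakyLink := func(from, to string) bool {
		return !(from == "broker-0" && to == "broker-2")
	}
	// broker-2 is down: nobody can reach it
	crashed := func(from, to string) bool { return to != "broker-2" }

	fmt.Println(probe("broker-0", "broker-2", members, 1, flakyLink))
	fmt.Println(probe("broker-0", "broker-2", members, 1, crashed))
}
```

The indirect probe is what keeps a single flaky link from getting a healthy member declared dead, which is part of why SWIM scales without flooding the network.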

Fix startup issues

While investigating clusters that failed to start, the team traced the root cause to out-of-memory and garbage collection issues stemming from our SWIM protocol implementation.

Several improvements were implemented as a result, improving resource usage and fixing the startup issues.

Exposed gossip configuration

While SWIM is by design scalable, it can be useful to tune it for your particular use case. By default, it is tuned to minimize the amount of network traffic while maintaining a relatively fast failure detection. In certain cases, however, it may be better to improve failure detection at the cost of more network chatter.

With this release, you can now fully configure our cluster membership protocol, letting you tune cluster membership for your own use case. For example, on small clusters where fast failure detection is desirable, broadcasting updates may be a good option.

Ensure eventual consistency of cluster view

Gossip is by design eventually consistent, so it sometimes takes time for all members to share the same view of the cluster. We found that gossiped updates were sometimes missed by certain members, which could leave a cluster that never became consistent; this is now fixed, restoring the eventual consistency guarantee.
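The post does not say how the fix works. The sketch below shows one common way gossip protocols guarantee eventual consistency: version-stamped entries merged by "highest version wins", plus a periodic full-state exchange (anti-entropy) so that an update missed during ordinary gossip is eventually repaired. All names are hypothetical.

```go
package main

import "fmt"

// entry is one member's state as known locally, stamped with a version that
// increases with every state change.
type entry struct {
	State   string
	Version uint64
}

// view is a node's picture of the cluster: member id -> latest known entry.
type view map[string]entry

// merge folds another node's view into v, keeping the higher version per
// member. The outcome depends only on the highest version seen, not on the
// order in which updates arrive (assuming each version number is used once).
func (v view) merge(other view) {
	for id, e := range other {
		if cur, ok := v[id]; !ok || e.Version > cur.Version {
			v[id] = e
		}
	}
}

// syncRound is one anti-entropy round: every node pulls the full view of every
// other node, so an update missed during ordinary gossip is still repaired.
func syncRound(nodes []view) {
	for i := range nodes {
		for j := range nodes {
			if i != j {
				nodes[i].merge(nodes[j])
			}
		}
	}
}

func main() {
	nodes := []view{
		{"broker-2": {"alive", 1}},
		{"broker-2": {"dead", 2}}, // only this node received the newest update
		{"broker-2": {"alive", 1}},
	}
	syncRound(nodes)
	for i, n := range nodes {
		fmt.Printf("node %d sees broker-2 as %s\n", i, n["broker-2"].State)
	}
}
```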

Improved log consistency check

Zeebe uses a replicated append-only log as its storage mechanism and as the backbone of its consensus module, so it’s critical that the log remains consistent. One key property of the append-only log is that it is totally ordered: record positions form a strictly monotonic sequence. Previously, the position computation was partly influenced by how much had been written to the log so far, and therefore to some extent by user input. This made positions unpredictable and made gaps or inconsistencies harder to detect. The computation has been rewritten so that positions follow a simple, predictable sequence, which lets us detect breaks in monotonicity before an entry is appended. Checking before appending is critical to prevent a leader from poisoning its own log and those of its followers.

Standalone gateway liveness probes

Until now, there was no official way to detect whether a gateway had failed. Failure detection was partially done via gossip, but for certain bugs (such as topology bugs), the only reliable way to find out that a gateway was bricked was to send requests and count the failure rate.

There is now an official health check, along with a series of health probes, which let you monitor the liveness of your standalone gateway. You can use them to decide when to spawn a new gateway and drop the old one, or when to stop sending traffic to a misbehaving gateway. You can read more about it here.

Golang job worker metrics

With the zbc Go client, you can create JobWorker instances, which take care of polling for jobs and let you focus solely on handling them. Until now, however, a job worker was a black box, with no way to know how many jobs it had activated.

You can now define a metrics consumer for your job workers. For now, only one metric is available: the remaining job count. This lets you better monitor your workers and tune their configuration, and can potentially feed an autoscaling metric. User AndreasBiber was kind enough to implement this feature for the Zeebe Go client, and there are plans to port it to the Java client as well.
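The real metrics interface lives in the Go client’s worker package; rather than guess its exact name and signature, the sketch below defines its own hypothetical `remainingJobsConsumer` interface and a toy `handleBatch` worker to show how a consumer that stores the latest remaining-job count could be wired up and read, for example by an exporter or an autoscaler.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// remainingJobsConsumer is a hypothetical stand-in for the metrics hook a job
// worker calls; the Go client's real interface may be named and shaped
// differently.
type remainingJobsConsumer interface {
	SetRemainingJobs(jobType string, count int)
}

// gauge keeps the most recent remaining-job count so it can be exported to a
// monitoring system or used as an autoscaling signal.
type gauge struct {
	value int64
}

func (g *gauge) SetRemainingJobs(jobType string, count int) {
	atomic.StoreInt64(&g.value, int64(count))
}

func (g *gauge) Get() int { return int(atomic.LoadInt64(&g.value)) }

// handleBatch plays the part of a worker that has activated a batch of jobs:
// it reports how many are still waiting before and after each job is handled.
func handleBatch(jobType string, batch []string, handle func(string), m remainingJobsConsumer) {
	m.SetRemainingJobs(jobType, len(batch))
	for i, job := range batch {
		handle(job)
		m.SetRemainingJobs(jobType, len(batch)-i-1)
	}
}

func main() {
	g := &gauge{}
	handleBatch("payment", []string{"job-1", "job-2", "job-3"}, func(job string) {
		fmt.Printf("handling %s, remaining before completion: %d\n", job, g.Get())
	}, g)
	fmt.Println("remaining after batch:", g.Get())
}
```

The atomic store and load keep the gauge safe to read from a metrics endpoint while the worker goroutine keeps updating it.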

Incorrect gateway topology

The gateway in Zeebe is primarily in charge of routing incoming gRPC requests to the appropriate broker, that is, the leader of the partition targeted by a given request. If it ends up with the wrong topology, it sends requests to the wrong broker, which rejects them. From the client’s perspective, this is equivalent to the leader being UNAVAILABLE.

To keep the topology consistent even though gossip is used for failure detection, we attach the Raft term to topology updates; in other words, we rely on the consensus module to order them. Unfortunately, there was a bug where nodes marked as dead were removed from the topology, but the last term seen for them was kept for ordering. If the dead node came back quickly with the same term (e.g. after a short network partition), its update was ignored as out of order. This resulted in an incorrect topology, with partitions wrongly reported as UNAVAILABLE.
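The term-based ordering and the bug can be reproduced in a few lines of Go. This is an illustrative model, not Zeebe’s code: the types are hypothetical, and the `fixed` branch is only one plausible remedy, since the post does not describe how the bug was actually fixed.

```go
package main

import "fmt"

// leaderUpdate is a gossiped claim that Broker leads Partition in Term.
type leaderUpdate struct {
	Partition int
	Broker    string
	Term      int64
}

type topology struct {
	leaders  map[int]string // partition -> known leader; absent means UNAVAILABLE
	lastTerm map[int]int64  // partition -> highest term applied so far
	fixed    bool           // enable the hypothetical fix in apply
}

func newTopology(fixed bool) *topology {
	return &topology{leaders: map[int]string{}, lastTerm: map[int]int64{}, fixed: fixed}
}

// apply uses the Raft term to order updates, so stale gossip cannot replace a
// newer leader.
func (t *topology) apply(u leaderUpdate) bool {
	last, seen := t.lastTerm[u.Partition]
	_, hasLeader := t.leaders[u.Partition]
	switch {
	case !seen || u.Term > last:
		// strictly newer term: accept
	case t.fixed && u.Term == last && !hasLeader:
		// Raft elects at most one leader per term, so a same-term claim for a
		// partition with no known leader can only be that leader coming back
	default:
		return false // considered out of order
	}
	t.leaders[u.Partition] = u.Broker
	t.lastTerm[u.Partition] = u.Term
	return true
}

// removeDead drops a broker marked as dead but keeps its last term around,
// as in the buggy behaviour described above.
func (t *topology) removeDead(broker string) {
	for p, b := range t.leaders {
		if b == broker {
			delete(t.leaders, p)
		}
	}
}

func main() {
	for _, fixed := range []bool{false, true} {
		t := newTopology(fixed)
		t.apply(leaderUpdate{Partition: 1, Broker: "broker-0", Term: 3})
		t.removeDead("broker-0") // short network partition
		t.apply(leaderUpdate{Partition: 1, Broker: "broker-0", Term: 3})
		leader, ok := t.leaders[1]
		fmt.Printf("fixed=%v: leader=%q available=%v\n", fixed, leader, ok)
	}
}
```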

Snapshotting improvements

Zeebe uses a replicated, ordered, append-only log as its main data store. A log alone, however, is impractical for aggregating information about running workflow instances. During processing, Zeebe therefore builds a hybrid in-memory/on-disk projection of its log: an aggregated representation of all the logged events, which represent state changes. To prevent the log from growing forever, Zeebe regularly takes durable snapshots of this state and compacts its log, removing all events whose state changes are already captured by the snapshot.

As snapshots are used during recovery, it’s imperative that they are durable and correctly replicated. The team therefore introduced checksum validation during snapshot replication, and improved the durability of snapshots both on creation and during replication.
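Checksum validation during replication boils down to the sender computing a checksum over each piece of the snapshot and the receiver recomputing it before persisting anything. The post does not say which checksum Zeebe uses; CRC-32C (via Go’s `hash/crc32`) is our choice for this sketch, and `chunk`, `newChunk` and `verify` are hypothetical.

```go
package main

import (
	"fmt"
	"hash/crc32"
)

var castagnoli = crc32.MakeTable(crc32.Castagnoli)

// chunk is one piece of a snapshot sent from leader to follower, together
// with the checksum the sender computed over its content.
type chunk struct {
	Name     string
	Content  []byte
	Checksum uint32
}

func newChunk(name string, content []byte) chunk {
	return chunk{Name: name, Content: content, Checksum: crc32.Checksum(content, castagnoli)}
}

// verify recomputes the checksum on the receiving side; a mismatch means the
// chunk was corrupted in transit and must be rejected rather than persisted.
func verify(c chunk) error {
	if got := crc32.Checksum(c.Content, castagnoli); got != c.Checksum {
		return fmt.Errorf("chunk %s: checksum mismatch (want %08x, got %08x)", c.Name, c.Checksum, got)
	}
	return nil
}

func main() {
	c := newChunk("chunk-0001", []byte("workflow instance state"))
	fmt.Println("intact:", verify(c))
	c.Content[0] ^= 0xFF // simulate a corrupted byte
	fmt.Println("corrupted:", verify(c))
}
```

Durability on creation is a separate concern, typically handled by flushing snapshot files to disk (for example with `os.File.Sync`) before the snapshot is considered complete.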

Slow followers unable to take part in the consensus

Zeebe uses Raft as its consensus algorithm, which can tolerate up to floor((N-1)/2) failures, where N is the number of nodes. So in a three-node cluster, any two nodes should be able to achieve quorum, allowing the cluster as a whole to make progress. We found, however, that in some cases where one follower was particularly slow, a leader was elected but could not achieve quorum, so no progress was made and the cluster was unavailable.

You can read more about the fix here, which better illustrates the exact case and how it was solved.
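The majority arithmetic behind Raft’s fault tolerance is easy to check in code. The helper names are ours; the rule itself is standard Raft: a strict majority is required, so a four-node cluster tolerates no more failures than a three-node one.

```go
package main

import "fmt"

// quorum is the size of a strict majority of n voting members: the number of
// votes a candidate needs, and of acknowledgements a leader needs to commit.
func quorum(n int) int { return n/2 + 1 }

// maxFailures is how many members may be unavailable while a majority
// remains, i.e. floor((n-1)/2).
func maxFailures(n int) int { return n - quorum(n) }

func main() {
	for n := 1; n <= 5; n++ {
		fmt.Printf("%d nodes: quorum %d, tolerates %d failure(s)\n", n, quorum(n), maxFailures(n))
	}
}
```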

Enhanced backpressure configuration

Handling backpressure in Zeebe is important in order to provide certain performance guarantees. In Zeebe, it is implemented via different algorithms which control the flow of incoming commands in a broker, per partition. By default, it uses a windowed version of the Vegas algorithm to regulate flow and converge towards a consistent command processing time (which plays the role of the RTT in the algorithm). Under the hood, this is delegated to Netflix’s concurrency-limits library, which provides other algorithms as well.

To support a wider set of use cases, you can now configure backpressure to use any of the supported algorithms, as well as tune their individual parameters, all of which is documented here. This lets you control flow in a partition based on your specific workload.

Operate 0.24.2

Flexible data schema migration

With Operate 0.24.2 we introduced a new migration procedure, which is more flexible than the previous approach. The new approach is similar to well-known migration tools for SQL databases, such as Flyway or Liquibase. Instead of having a single migration step from one Operate version to another, we now ship multiple small migration steps, one for each required change in the data model.

This allows the migration tool in Operate to decide which migration steps have already been applied and which still have to be executed. This is helpful for migrations from different source versions: for example, migrating from Operate 0.23.0 to 0.24.2 might require different steps than migrating from 0.23.3 to 0.24.2, as 0.23.3 already contains earlier fixes. You can read more about this in our documentation.
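The core idea, running only the steps that have not been applied yet, can be sketched in a few lines of Go. This is not Operate’s migration code: the step IDs are hypothetical, and the `applied` set stands in for whatever record of completed steps the real tool keeps.

```go
package main

import "fmt"

// step is one small, self-contained change to the data schema.
type step struct {
	ID          string
	Description string
}

// pending returns, in shipping order, the steps not yet recorded as applied,
// much like Flyway or Liquibase consult a history table before migrating.
func pending(all []step, applied map[string]bool) []step {
	var todo []step
	for _, s := range all {
		if !applied[s.ID] {
			todo = append(todo, s)
		}
	}
	return todo
}

func main() {
	all := []step{
		{"0.23.3-01", "fix shipped in a 0.23.x patch release"},
		{"0.24.0-01", "schema change introduced in 0.24.0"},
		{"0.24.2-01", "schema change introduced in 0.24.2"},
	}
	from0230 := map[string]bool{}                  // no patch fixes applied yet
	from0233 := map[string]bool{"0.23.3-01": true} // already contains the patch fix

	fmt.Println("from 0.23.0:", len(pending(all, from0230)), "steps")
	fmt.Println("from 0.23.3:", len(pending(all, from0233)), "steps")
}
```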

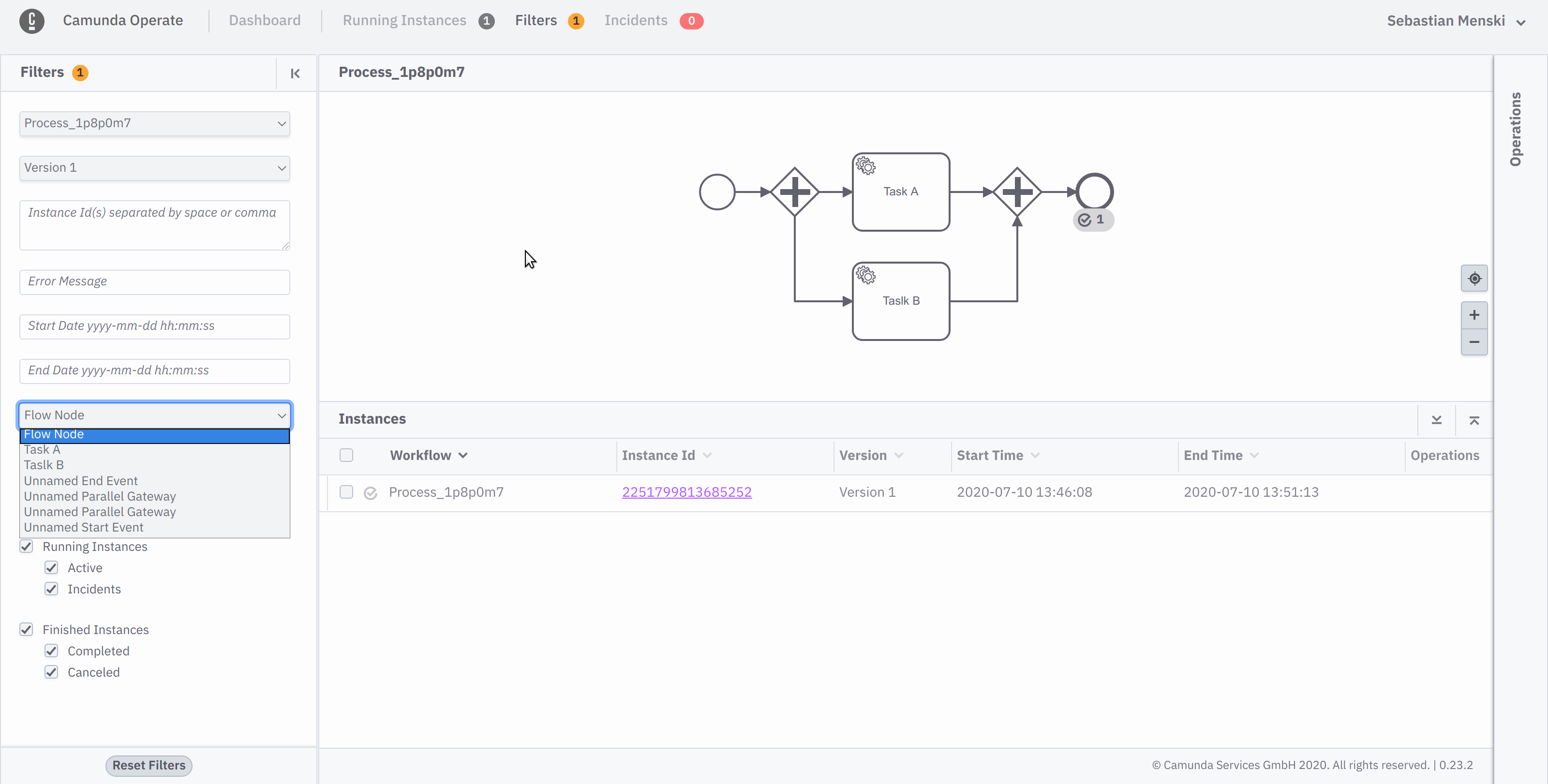

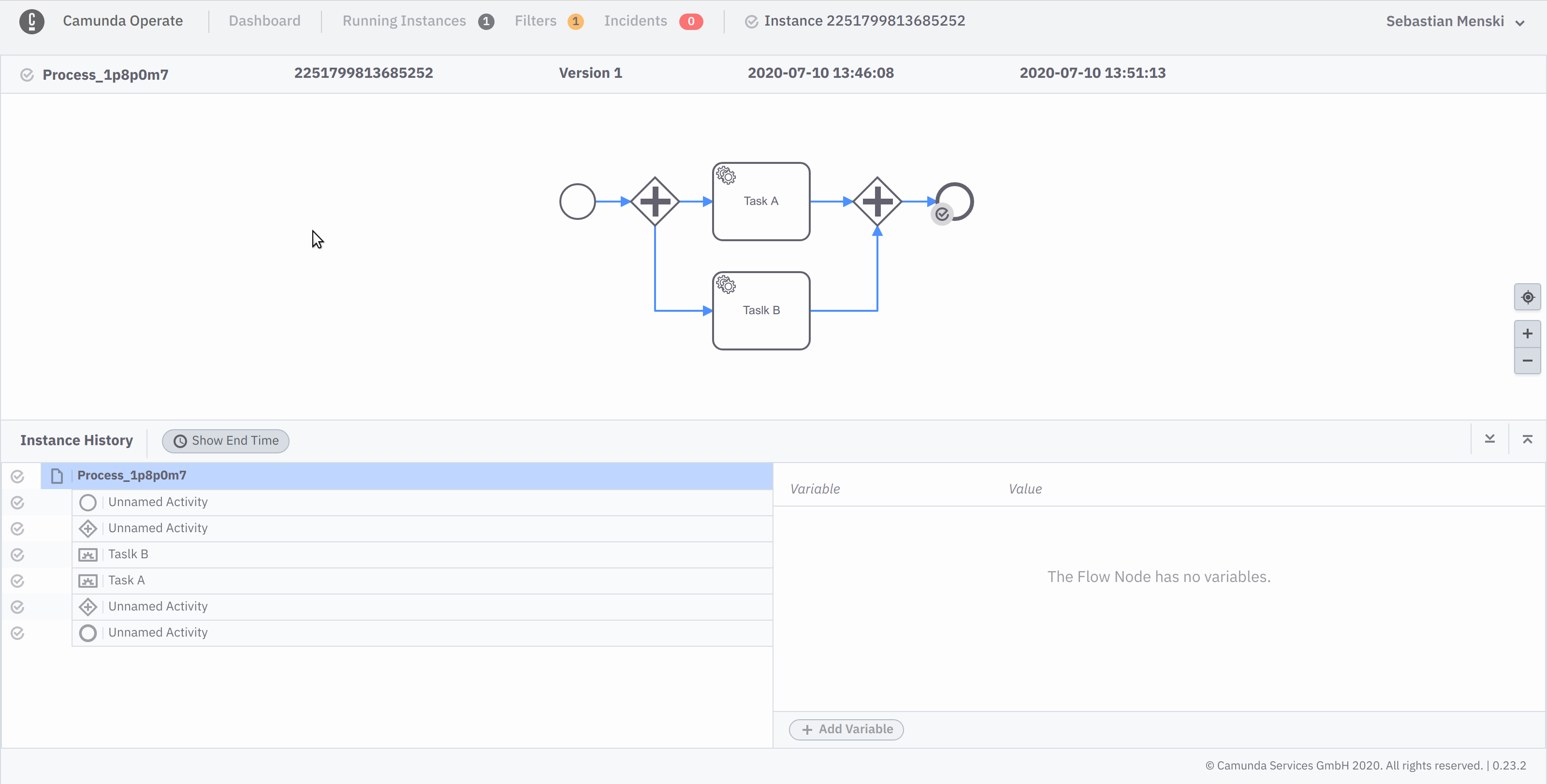

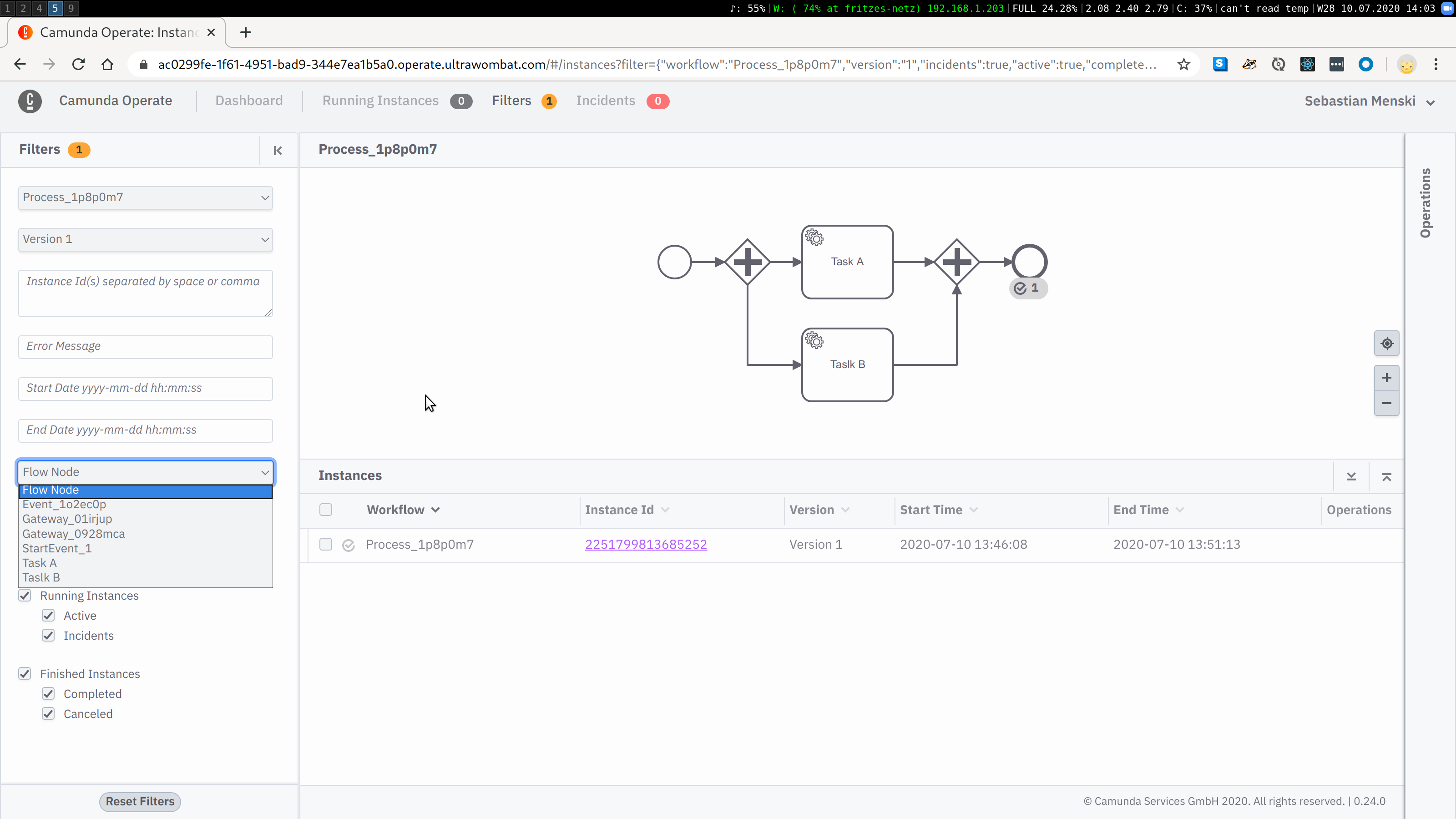

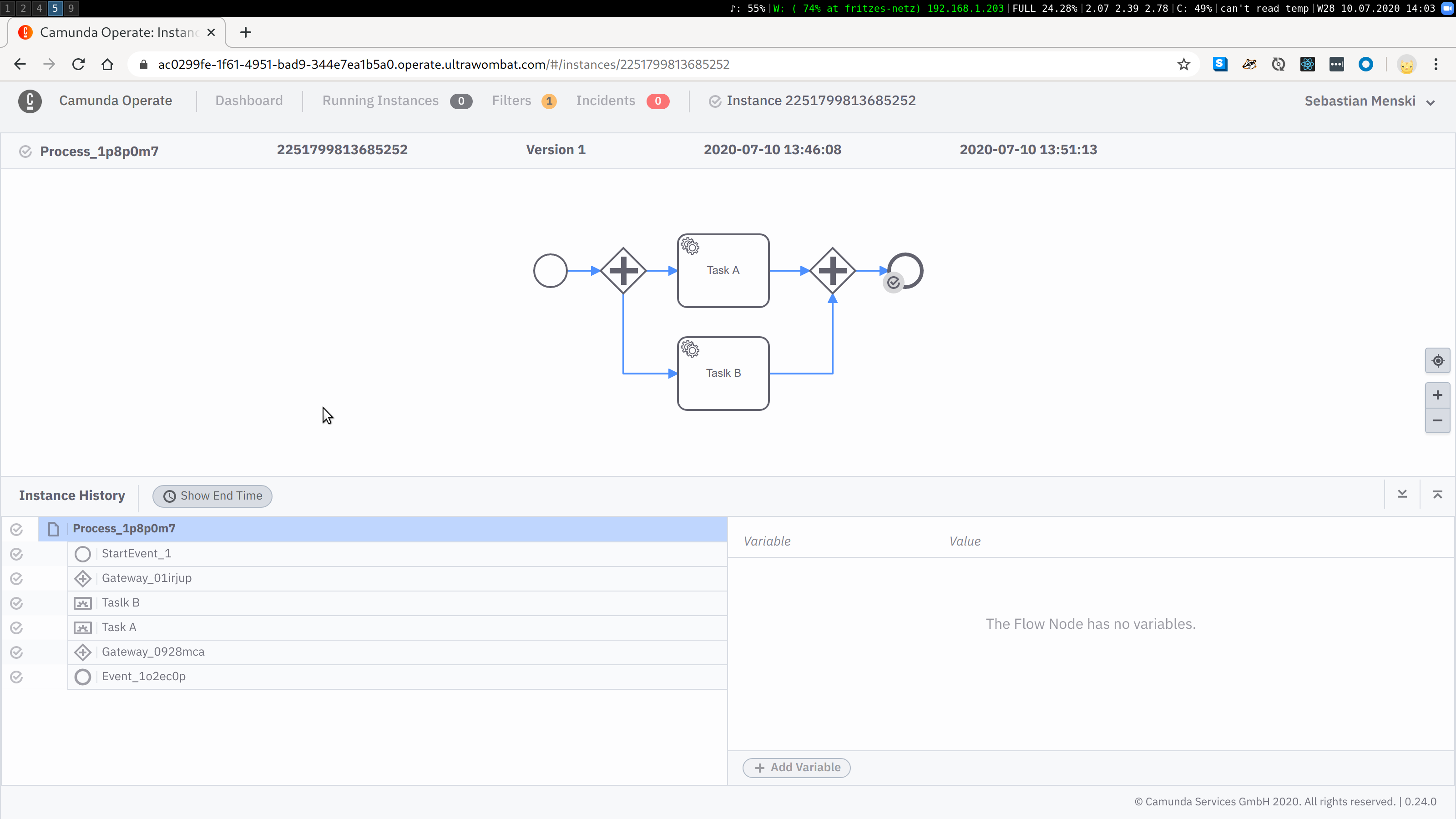

Improved handling of unnamed activities

In a BPMN model, giving human-readable names to activities is optional, as they always have a unique technical identifier. While tasks and intermediate events are commonly given clear, human-readable names, a gateway or a start/end event may well stay unnamed in the model.

Previously, activities without names were shown in Operate with a generic placeholder name, to indicate that they had no human-readable name set. As you can see in the screenshots, this led to situations where it was unclear to the user which activity was being referred to.

With Operate 0.24, these activities are now listed with their unique technical identifier, which makes it easier to correlate the information shown in Operate with the actual BPMN model.
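The new behaviour is a simple fallback rule. Here it is as a Go sketch with a hypothetical `displayName` helper; it is not Operate’s code, and treating a whitespace-only name as missing is our assumption.

```go
package main

import (
	"fmt"
	"strings"
)

// displayName returns the label to show for a BPMN element: its
// human-readable name if the modeller set one, otherwise its technical id.
func displayName(name, id string) string {
	if strings.TrimSpace(name) == "" {
		return id
	}
	return name
}

func main() {
	fmt.Println(displayName("Check order", "Task_0x1"))
	fmt.Println(displayName("", "Gateway_1ab2"))
}
```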

Get In Touch

There are a number of ways to get in touch with the Zeebe community to ask questions and give us feedback.

- Join the Zeebe user forum

- Join the Zeebe Slack community

- Reach out to dev advocate Josh Wulf on Twitter

- Reach out to dev advocate Mauricio Salatino on Twitter

We hope to hear from you!