*Camunda Platform 8, our cloud-native solution for process orchestration, launched in April 2022. Images and supporting documentation in this post may reflect an earlier version of our cloud and software solutions.*

I’m a distributed systems engineer working on the Camunda Zeebe project, which is part of Camunda Platform 8. I’m highly interested in SRE topics, so I started maintaining the Helm charts for Camunda Platform 8.

I’m excited to share what I learned and experienced while looking for a good way to write automated tests for Helm charts. At the end of this blog post, I’ll present the solution we currently use, which meets all our requirements.

Please be aware that these are my personal experiences and might be a bit subjective, but I try to be as objective as possible.

How it Began

We started with the community-maintained Helm charts for Zeebe and Camunda Platform 8-related tools, like Tasklist and Operate. This project lacked support and had stability issues.

In the past, we often had issues with the charts being broken, sometimes because we added a new feature or property, and sometimes because a property had never been used before and was hidden behind a condition. We wanted to avoid that and give the users a better experience.

In early 2022, we at Camunda wanted to create some new Helm charts, based on the old ones we had. The new Helm charts needed to be officially supported by Camunda. In order to do that with a clear conscience, we wanted to add some automated tests to the charts.

Prerequisites

In order to understand this blog post, you should have some knowledge of the following topics:

Helm Testing. What is the Issue?

Testing in the Helm world is, I would say, not as well evolved as it should be. Some tools exist, but they lack usability or need too much boilerplate code. Sometimes it’s not really clear how to use them or how to write tests with them.

Some posts around that topic already exist, but there aren’t many. For example:

This one really helped us: Automated Testing for Kubernetes and Helm Charts using Terratest.

It explains how to test Helm charts with Terratest, a framework to write tests for Helm charts, and other Kubernetes-related things.

We compared Terratest, writing golden file tests (here’s a blog post about that), and using Chart Testing (CT). You can find the details in this GitHub issue.

This issue contains a comparison between the test tools, as well as some subjective field reports, which I wrote during the testing. It helped me to make some decisions.

What and How to Test

First of all, we separated our tests into two parts, with different targets and goals:

- Template tests (unit tests) – Which verify the general structure.

- Integration tests – Which verify whether we can install the charts and use them.

Template tests

With the template tests, we want to verify the general structure. This includes whether the output is valid YAML, whether the right values are set, whether default values have changed, and whether they’re set at all.

For template tests, we combine both golden files and Terratest. Generally speaking, golden files store the expected output of a certain command or the expected response to a specific request. In our case, the golden files contain the rendered manifests that `helm template` outputs. This allows you to verify that the default values are set and change only in a controlled manner, which reduces the burden of writing many tests.

If we want to verify specific properties (or conditions), we can use the direct property tests. We will come to that again later.

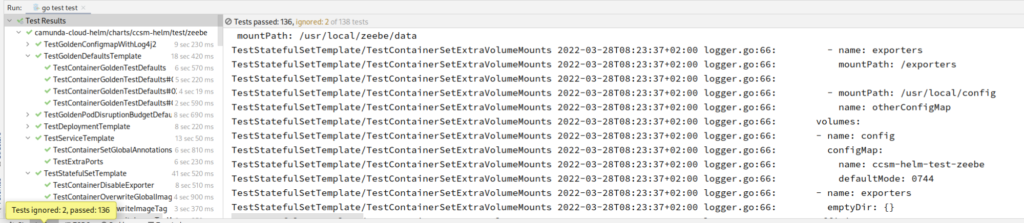

This allows us to use one tool (Terratest) and separate the tests per manifest, such as a test for the Zeebe statefulset, the Zeebe gateway deployment, etc. The tests can easily be run from the command line, an IDE, or CI.

Integration tests

With the integration tests we want to test for two things:

- Whether the charts can be deployed to Kubernetes, and are accepted by the K8s API.

- Whether the services are running and can work with each other.

Other things, like broken templates, incorrectly set values, etc., are caught by the tests above.

So to turn it around, here are potential failure cases we can find with such tests:

- Specifications that look like valid YAML but are in the wrong place, so they aren’t accepted by the K8s API.

- Services that don’t become ready because of configuration errors, such as being unable to reach each other.

The first case could also be covered by other tools that validate manifests against the K8s API, but the second one could not.

In order to write the integration tests, we tried out the Chart Testing tool and Terratest. We chose Terratest over Chart Testing. If you want to know why, read the next section. Otherwise, you can simply skip it.

Chart testing

While trying to write the tests using Chart Testing, we encountered several issues that made the tool difficult to use, and the tests difficult to maintain.

For example, the options to configure the testing process seem rather limited – see CT Install documentation for available options. In particular, during the Helm install phase, our tests deploy a lot of components (Elasticsearch, Zeebe) that take ages to become ready. However, Chart Testing times out by default after three minutes, and we didn’t find a way to adjust this type of setting. As such, we actually were never able to run a successful test using the `ct` CLI.

Another painful point was the way the tests are shipped and executed, and eventually how results are reported. Simply put, the Chart Testing tool wraps the Helm CLI and runs the `helm install` and `helm test` commands. To be executed using the `helm test` command, the tests have to be configured and deployed as part of the Helm chart. This means the tests have to be embedded inside a Docker image, which might not be very practical, and the Helm chart also needs to be modified to ship with the additional test settings.

If the tests fail in CI and you want to reproduce the failure, you need the `ct` CLI locally and have to run `ct install` to redeploy the whole Helm chart and run the tests. When the tests fail, the complete logs of all the containers are printed, which can be a large amount of data to inspect. We found it difficult to iterate on the tests, and quite cumbersome to debug them when they were failing.

All the reasons above pushed us to use Terratest (see next section) to write the tests. The benefit here is that we have one tool for both unit and integration tests, and more control over it. It makes it easy to run and debug the tests. In general, the tests were also quite simple to write, and the failures were easy to understand.

For more information regarding this, please check the comments in the GitHub issue.

Helm Chart Tests In Practice

In the following section, I would like to present how we use Terratest, and what our new tests for our Helm charts look like.

Golden files test

We wrote a base test, which renders given Helm templates and compares them against golden files. The golden files can be generated via a separate flag. The golden files are tracked in git, which allows us to see changes easily via a git diff. This means if we change any defaults, we can directly see the resulting rendered manifests. These tests ensure that the Helm chart templates render correctly and the output of the templates changes in a controlled manner.

Golden Base

    package golden

    import (
        "flag"
        "os"
        "regexp"

        "github.com/gruntwork-io/terratest/modules/helm"
        "github.com/gruntwork-io/terratest/modules/k8s"
        "github.com/stretchr/testify/suite"
    )

    // update controls whether the golden files are rewritten with the current output.
    var update = flag.Bool("update-golden", false, "update golden test output files")

    type TemplateGoldenTest struct {
        suite.Suite
        ChartPath      string
        Release        string
        Namespace      string
        GoldenFileName string
        Templates      []string
        SetValues      map[string]string
    }

    func (s *TemplateGoldenTest) TestContainerGoldenTestDefaults() {
        options := &helm.Options{
            KubectlOptions: k8s.NewKubectlOptions("", "", s.Namespace),
            SetValues:      s.SetValues,
        }
        output := helm.RenderTemplate(s.T(), options, s.ChartPath, s.Release, s.Templates)

        // Remove the helm.sh/chart label (it contains the chart version) before comparing.
        regex := regexp.MustCompile(`\s+helm.sh/chart:\s+.*`)
        bytes := regex.ReplaceAll([]byte(output), []byte(""))
        output = string(bytes)

        goldenFile := "golden/" + s.GoldenFileName + ".golden.yaml"

        if *update {
            err := os.WriteFile(goldenFile, bytes, 0644)
            s.Require().NoError(err, "Golden file was not writable")
        }

        expected, err := os.ReadFile(goldenFile)

        // then
        s.Require().NoError(err, "Golden file doesn't exist or was not readable")
        s.Require().Equal(string(expected), output)
    }

The base test allows us to easily add new golden file tests for each of our sub-charts. For example, we have the following test for the Zeebe sub-chart:

    package zeebe

    import (
        "path/filepath"
        "strings"
        "testing"

        "camunda-cloud-helm/charts/ccsm-helm/test/golden"

        "github.com/gruntwork-io/terratest/modules/random"
        "github.com/stretchr/testify/require"
        "github.com/stretchr/testify/suite"
    )

    func TestGoldenDefaultsTemplate(t *testing.T) {
        t.Parallel()

        chartPath, err := filepath.Abs("../../")
        require.NoError(t, err)

        templateNames := []string{"service", "serviceaccount", "statefulset", "configmap"}

        // Run one golden file suite per template.
        for _, name := range templateNames {
            suite.Run(t, &golden.TemplateGoldenTest{
                ChartPath:      chartPath,
                Release:        "ccsm-helm-test",
                Namespace:      "ccsm-helm-" + strings.ToLower(random.UniqueId()),
                GoldenFileName: name,
                Templates:      []string{"charts/zeebe/templates/" + name + ".yaml"},
            })
        }
    }

Here, we test the Zeebe resources (service, serviceaccount, statefulset, and configmap) rendered with default values against the golden files. Here are the golden files.
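To illustrate why the base test removes the `helm.sh/chart` label before comparing, here is a minimal standalone sketch. Only the regular expression is taken from the base test above; the `normalize` helper and the two manifests are made up for illustration.

```go
package main

import (
	"fmt"
	"regexp"
)

// chartLabel is the same pattern the base test uses to drop the helm.sh/chart label.
var chartLabel = regexp.MustCompile(`\s+helm.sh/chart:\s+.*`)

// normalize is a hypothetical helper that removes the chart label line from a manifest.
func normalize(manifest string) string {
	return chartLabel.ReplaceAllString(manifest, "")
}

func main() {
	// Two made-up manifests that differ only in the chart version label.
	oldRender := "metadata:\n  labels:\n    app: zeebe\n    helm.sh/chart: zeebe-0.0.1\n"
	newRender := "metadata:\n  labels:\n    app: zeebe\n    helm.sh/chart: zeebe-0.0.2\n"

	// The raw renders differ, the normalized ones do not.
	fmt.Println(oldRender == newRender)
	fmt.Println(normalize(oldRender) == normalize(newRender))
}
```

Without this step, every chart version bump would presumably change all golden files at once, which would bury the meaningful changes in the git diff.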

Property test:

As described above, sometimes, we want to test specific properties, like conditions in our templates. Here it’s easier to write specific Terratest tests.

We do that for each manifest, like the statefulset, and then call it `statefulset_test.go`.

In such a Go test file, we have a base structure, which looks like this:

    type statefulSetTest struct {
        suite.Suite
        chartPath string
        release   string
        namespace string
        templates []string
    }

    func TestStatefulSetTemplate(t *testing.T) {
        t.Parallel()

        chartPath, err := filepath.Abs("../../")
        require.NoError(t, err)

        suite.Run(t, &statefulSetTest{
            chartPath: chartPath,
            release:   "ccsm-helm-test",
            namespace: "ccsm-helm-" + strings.ToLower(random.UniqueId()),
            templates: []string{"charts/zeebe/templates/statefulset.yaml"},
        })
    }

If we want to test a condition in our templates, which looks like this:

    spec:
      {{- if .Values.priorityClassName }}
      priorityClassName: {{ .Values.priorityClassName | quote }}
      {{- end }}

Then, we can easily add such a test to the `statefulset_test.go` file. That would look like this:

    func (s *statefulSetTest) TestContainerSetPriorityClassName() {
        // given
        options := &helm.Options{
            SetValues: map[string]string{
                "zeebe.priorityClassName": "PRIO",
            },
            KubectlOptions: k8s.NewKubectlOptions("", "", s.namespace),
        }

        // when
        output := helm.RenderTemplate(s.T(), options, s.chartPath, s.release, s.templates)

        // v1 refers to the package "k8s.io/api/apps/v1", which defines StatefulSet.
        var statefulSet v1.StatefulSet
        helm.UnmarshalK8SYaml(s.T(), output, &statefulSet)

        // then
        s.Require().Equal("PRIO", statefulSet.Spec.Template.Spec.PriorityClassName)
    }

In this test, we set the priorityClassName to a custom value like “PRIO”, render the template, and verify that the object (statefulset) contains that value.

Integration test

Terratest allows us to write not only template tests, but also real integration tests. This means we can access a Kubernetes cluster, create namespaces, install the Helm chart, and verify certain properties.

I’ll only present the basic setup here, since otherwise, it would go too far. If you’re interested in what our integration test looks like, check this out. Here we set up the namespaces, install the Helm charts, and test each service we deploy.

Basic Setup:

    //go:build integration
    // +build integration

    package integration

    import (
        "os"
        "path/filepath"
        "strings"
        "testing"
        "time"

        "github.com/gruntwork-io/terratest/modules/helm"
        "github.com/gruntwork-io/terratest/modules/k8s"
        "github.com/gruntwork-io/terratest/modules/random"
        "github.com/stretchr/testify/require"
        "github.com/stretchr/testify/suite"
        v1 "k8s.io/apimachinery/pkg/apis/meta/v1"
    )

    type integrationTest struct {
        suite.Suite
        chartPath   string
        release     string
        namespace   string
        kubeOptions *k8s.KubectlOptions
    }

    func TestIntegration(t *testing.T) {
        chartPath, err := filepath.Abs("../../")
        require.NoError(t, err)

        namespace := createNamespaceName()
        kubeOptions := k8s.NewKubectlOptions("<KUBERNETES_CLUSTER_NAME>", "", namespace)

        suite.Run(t, &integrationTest{
            chartPath:   chartPath,
            release:     "zeebe-cluster-helm-it",
            namespace:   namespace,
            kubeOptions: kubeOptions,
        })
    }

Similar to the property test above, we have a base structure that allows us to write the integration tests. It sets up the test environment and allows us to specify the target Kubernetes cluster via kubeOptions.

In order to separate the integration tests from the normal unit tests, we use Go build tags. The first lines above define the tag `integration`, so these tests only run when the tag is passed, for example via `go test -tags integration ./.../integration`.

We create the Kubernetes namespace name either randomly (using a helper from Terratest) or based on the git commit if triggered as a GitHub action. We’ll get to that later.

    func truncateString(str string, num int) string {
        shortenStr := str
        if len(str) > num {
            shortenStr = str[0:num]
        }
        return shortenStr
    }

    func createNamespaceName() string {
        // if triggered by a github action the environment variable is set
        // we use it to better identify the test
        commitSHA, exist := os.LookupEnv("GITHUB_SHA")
        namespace := "ccsm-helm-"
        if !exist {
            namespace += strings.ToLower(random.UniqueId())
        } else {
            namespace += commitSHA
        }

        // max namespace length is 63 characters
        // https://kubernetes.io/docs/concepts/overview/working-with-objects/names/#dns-label-names
        return truncateString(namespace, 63)
    }

The Go testify suite allows us to run functions before and after a test, which we use to create and delete a namespace.

    func (s *integrationTest) SetupTest() {
        k8s.CreateNamespace(s.T(), s.kubeOptions, s.namespace)
    }

    func (s *integrationTest) TearDownTest() {
        k8s.DeleteNamespace(s.T(), s.kubeOptions, s.namespace)
    }

The example integration test is fairly simple: we install the Helm charts with default values and wait until all pods are available. For that, we can use some of the helpers that Terratest offers.

    func (s *integrationTest) TestServicesEnd2End() {
        // given
        options := &helm.Options{
            KubectlOptions: s.kubeOptions,
        }

        // when
        helm.Install(s.T(), options, s.chartPath, s.release)

        // then
        // await that all ccsm related pods become ready
        pods := k8s.ListPods(s.T(), s.kubeOptions, v1.ListOptions{LabelSelector: "app=camunda-cloud-self-managed"})

        for _, pod := range pods {
            k8s.WaitUntilPodAvailable(s.T(), s.kubeOptions, pod.Name, 10, 10*time.Second)
        }
    }

As written above, our actual integration test is far more complex, but this should give you a good idea of what you can do. Since Terratest is written in Go, we can write all our tests in Go, use mechanisms like build tags, and use Go libraries like testify. Terratest makes it easy to access the Kubernetes API, run Helm commands like `install`, and validate the outcome.

I really appreciate the verbosity, since the rendered Helm templates are also printed to standard out when running the tests, which helps to debug them. After implementing the integration tests, we were quite satisfied with the result and with the approach of writing tests as code, which stands in contrast to the separate abstraction around the tests that you would have with the Chart Testing tool.

After creating such integration tests, we, of course, wanted to automate them. We did that with GitHub actions (see next section).

Automation

As written above, we automate our tests via GitHub Actions. For normal tests, this is quite simple. You can find an example here of how we run our normal template tests.

It becomes more interesting for integration tests, where you want to connect to an external Kubernetes cluster. Since we use GKE, we also use the corresponding GitHub actions to authenticate with Google Cloud and get the credentials.

Follow this guide to set up the needed workload identity federation. This is the recommended way to authenticate with Google Cloud resources from outside, and it replaces the old usage of service account keys. Workload identity federation lets you access resources directly, using a short-lived access token, and eliminates the maintenance and security burden associated with service account keys.

After setting up the workload identity federation, the usage in GitHub actions is fairly simple.

As an example, we use the following in our GitHub action:

    # Add "id-token" with the intended permissions.
    permissions:
      contents: 'read'
      id-token: 'write'

    steps:
    - uses: actions/checkout@v3

    - id: 'auth'
      name: 'Authenticate to Google Cloud'
      uses: 'google-github-actions/auth@v0'
      with:
        workload_identity_provider: '<Workload Identity Provider resource name>'
        service_account: '<service-account-name>@<project-id>.iam.gserviceaccount.com'

    - id: 'get-credentials'
      name: 'Get GKE credentials'
      uses: 'google-github-actions/get-gke-credentials@v0'
      with:
        cluster_name: '<cluster-name>'
        location: 'europe-west1-b'

    # The KUBECONFIG env var is automatically exported and picked up by kubectl.
    - id: 'check-credentials'
      name: 'Check credentials'
      run: 'kubectl auth can-i create deployment'

This is based on the examples of google-github-actions/auth and google-github-actions/get-gke-credentials. Checking the credentials is the last step to verify whether we have enough permissions to create a deployment, which is necessary for our integration tests.

After this, you just need to install Helm and Go in your GitHub action container. In order to run the integration test, you can execute `go test` with the integration build tag (described above). We use a Makefile for that. Take a look at the full GitHub action.

Last Words

We are now quite satisfied with the new approach and tests. Writing such tests has allowed us to detect several issues in our Helm charts, which is quite rewarding. It’s fun to write and execute them (the template tests are quite fast), and it always gives us good feedback.

Side note: what I really like about Terratest is not only the functionality and how easy it is to write tests, but also its verbosity. On each run, the complete template is printed, which is quite helpful. In addition, when an error occurs, it’s clear where the issue is.

I hope this knowledge and the examples above help you. Feel free to contact me or tweet me if you have any thoughts to share or better ideas on how to test Helm charts. 🙂