Over the years, I’ve almost always used my time at conferences to build something fun. If Camunda has a booth, you’ll find me there, building some applications while I share my screen for people walking by. If they’re interested in whatever project I’m building, they may ask about it, and I will use the opportunity to get their feedback on the project and on Camunda as a platform. Sometimes, I implement the suggestions while we talk. It’s a lot of fun.

Recently, in Berlin, I was at the WeAreDevelopers conference, and I decided to spend my time there building a trivia game. I tested it out on folks who walked by, and they would suggest changes or additional features, which I added over the course of the event. It not only turned out to be a lot of fun, but I actually ended up creating a really interesting game, with features I wouldn’t have thought about were it not for the players themselves.

The first iteration

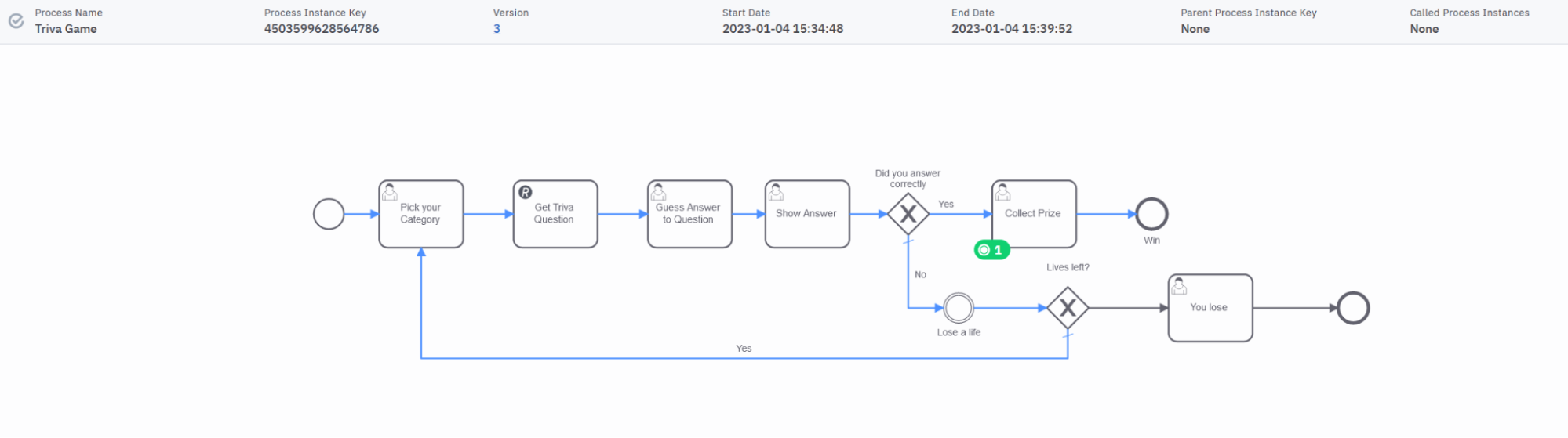

I arrived with a very basic model that I had actually built at another conference.

A user is asked to enter a category for the trivia question. Then a REST Connector queries a service that returns a question and its answer. The user sees the question and types in their answer, and the next form shows both the answer they gave and the answer provided by the service. If they're correct, they win a prize; if not, they lose a life and can either try again or lose the game.
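To make the win/lose branching of this first version concrete, here is a minimal Python sketch of the lives logic. It is not the Camunda model itself. The `Game` class, the `STARTING_LIVES` value, and the step labels are all hypothetical, since the post doesn't say how many lives a player gets. In this version, the `correct` flag comes from the player comparing the two answers by hand.

```python
from dataclasses import dataclass

# Hypothetical: the post does not say how many lives a player starts with.
STARTING_LIVES = 3


@dataclass
class Game:
    lives: int = STARTING_LIVES
    finished: bool = False
    won: bool = False

    def record_answer(self, correct: bool) -> str:
        """Apply one judged answer and return a label for the next step."""
        if self.finished:
            raise RuntimeError("the game is already over")
        if correct:
            # A correct answer ends the game with a prize.
            self.finished = True
            self.won = True
            return "win prize"
        # A wrong answer costs a life; the game ends when none are left.
        self.lives -= 1
        if self.lives > 0:
            return "try again"
        self.finished = True
        return "game over"
```

For example, a player who starts with two lives and answers wrong twice gets "try again" and then "game over".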

Automating the manual work

One of the first things someone pointed out was that checking the answer manually was annoying. I had done it that way because people might misspell the answer, or give one that is close enough to count as correct, and either case is hard to check automatically. However, after giving it some thought, I figured it might be easy to just ask ChatGPT whether the answer is correct. I would simply provide it with the question and the guess and see what it says.

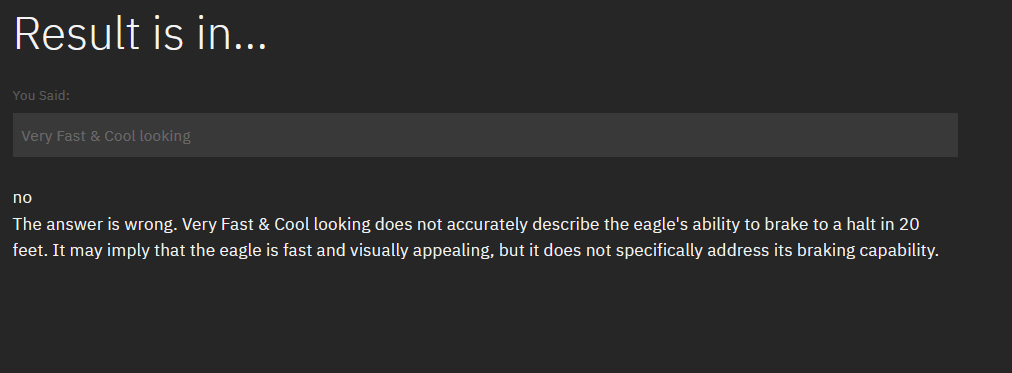

I used the OpenAI connector and added the prompt:

"If I were asked " + question + ", would " + guess + " be correct? Answer yes or no in lowercase, and also provide a brief reason why you think it's right or wrong."ChatGPT gives me back two important pieces of information. The first is simply letting me know if the answer is correct. I’ll be using that data to route the process accordingly. I also get another piece of info. If the guess is wrong, the guesser can find out why exactly they’re wrong! Because every moment can be a learning moment.

For example, I was asked to name the species of eagle that can go really fast and brake very quickly. Unfortunately, my answer was not accepted by ChatGPT, but at least I was given a reason.

In this case, ChatGPT and I will need to agree to disagree.

Making it more fun to play

In most trivia games, if you don't know the answer, that's it: you've lost, and the game stops being fun. A conference attendee, Ajay, inspired me to change this part of the process. Ajay was sure he could guess the answer to his question if only it were in his native language. Another booth visitor simply asked for some kind of hint for a question they were sure they knew the answer to. So I had two new feature requests:

- Translate the question into another language.

- Generate a hint for the question being asked.

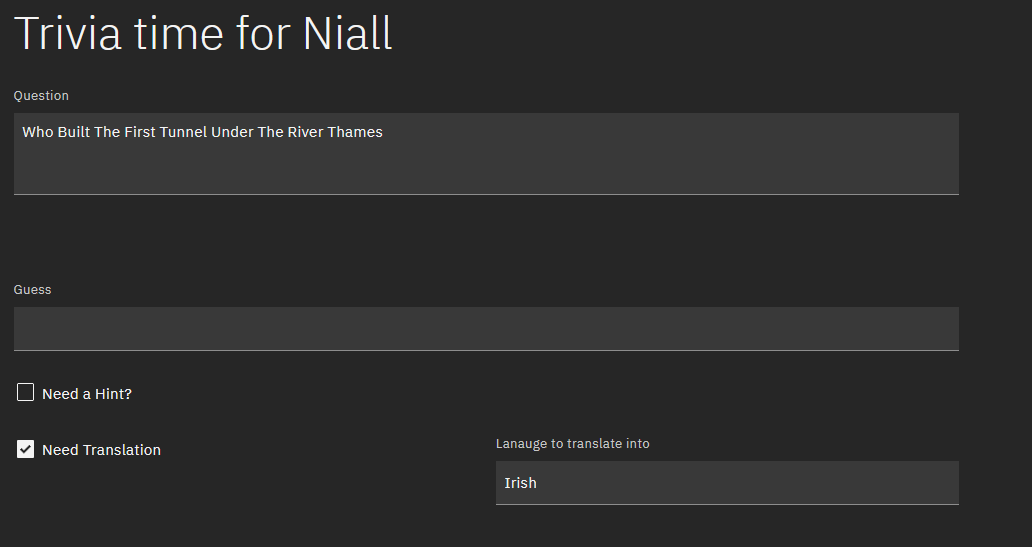

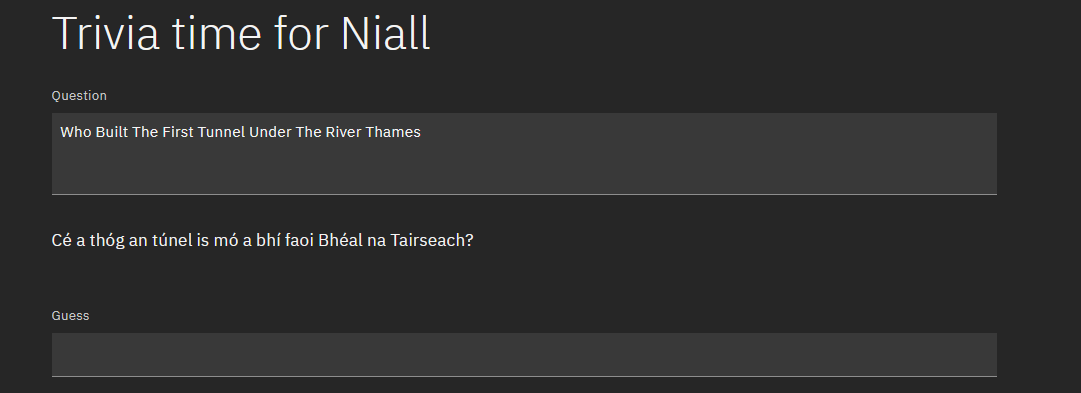

I had already used ChatGPT once, so why not again? The translation was pretty easy: just send the question along with the target language, and you get a translation back. On the front end, this simply meant offering users a small translation option at the bottom of the form.

The form then reappears with the translation shown below the original question.

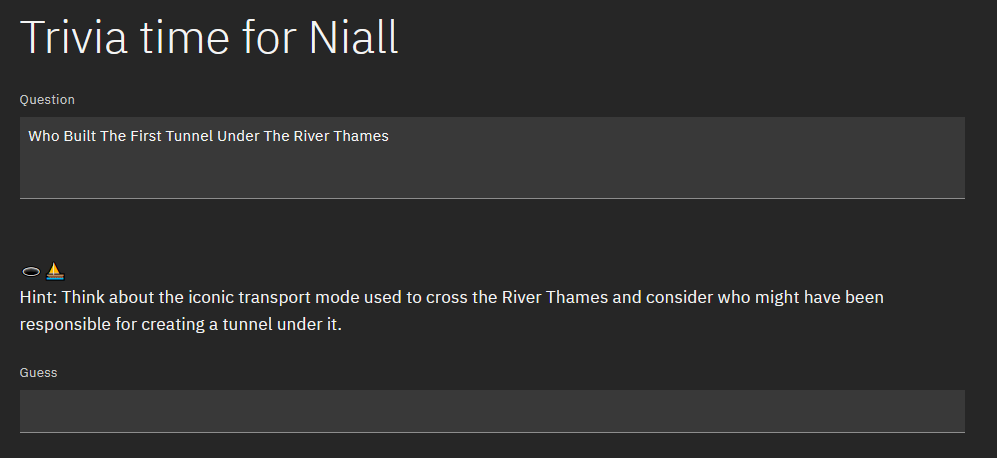

The hint worked the same way: if users needed a hint, they'd just tick a box, and I would ask ChatGPT for a hint to the question's answer. I also asked for emojis to be included.
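Both features come down to building one more prompt from data the process already has. The post doesn't show the exact wording used, so the two builders below are hypothetical. In particular, passing the answer to the hint prompt and asking the model not to reveal it are assumptions about how such a prompt might be phrased.

```python
def translation_prompt(question: str, language: str) -> str:
    # Hypothetical wording: the post only says the question and target language are sent.
    return f'Translate the following trivia question into {language}: "{question}"'


def hint_prompt(question: str, answer: str) -> str:
    # Hypothetical wording: requests emojis, as described above, and
    # (an assumption) asks the model not to give the answer away.
    return (
        f'Give a short hint, including some emojis, for the trivia question "{question}". '
        f'The answer is "{answer}", but do not reveal it.'
    )
```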

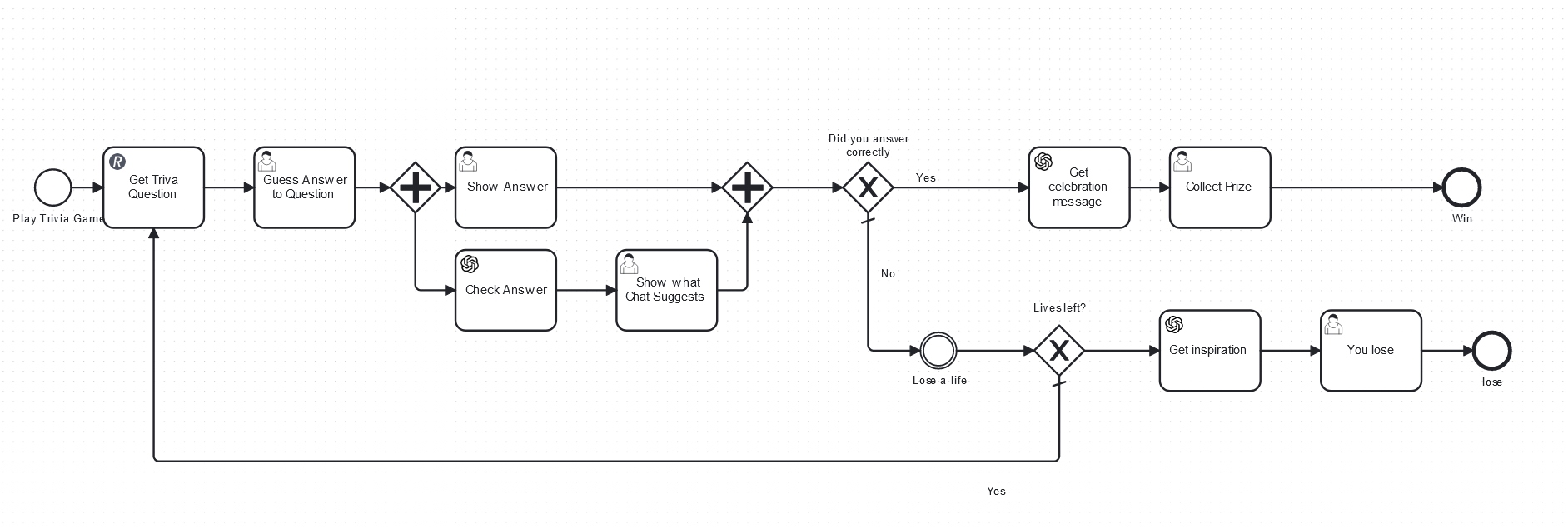

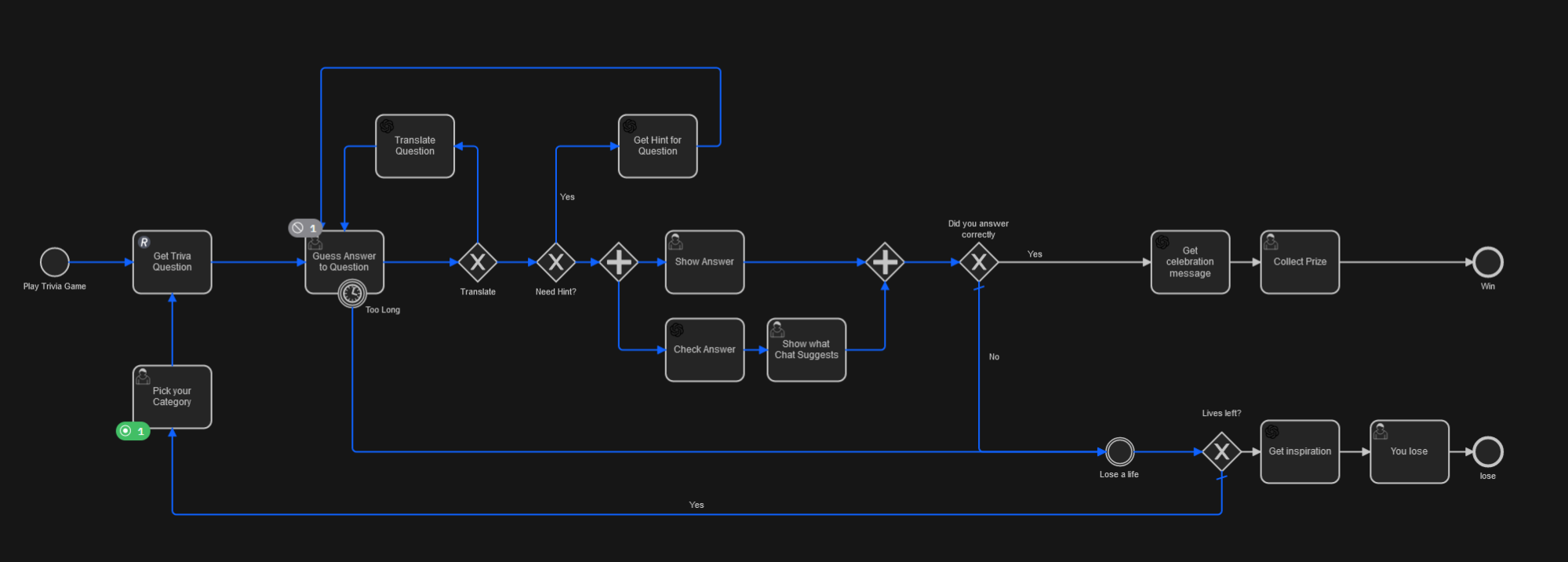

What’s also quite interesting is how this ended up looking from a BPMN perspective:

The model is built so people can ask for multiple hints or have the question translated into different languages. Only when they get bored or want to guess the answer does the process move on to the most exciting part of any trivia game: finding out if you're winning!
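In code, that loop-back behaves roughly like the Python sketch below. Each form submission either adds a hint or a translation and returns to the same form, or submits a guess and leaves the loop. The action tuples and the `get_hint`/`translate` callables are hypothetical stand-ins for the form fields and the ChatGPT calls.

```python
from typing import Callable, Iterable


def run_question_loop(
    actions: Iterable[tuple[str, str]],
    get_hint: Callable[[], str],
    translate: Callable[[str], str],
) -> tuple[list[str], str]:
    """Serve hints and translations until the player submits a guess.

    Each action stands in for one form submission: ("hint", ""),
    ("translate", "<language>") or ("guess", "<the player's guess>").
    Returns the extra text shown on the form and the final guess.
    """
    shown: list[str] = []
    for kind, value in actions:
        if kind == "hint":
            shown.append(get_hint())
        elif kind == "translate":
            shown.append(translate(value))
        elif kind == "guess":
            # Submitting a guess is the only way out of the loop.
            return shown, value
        else:
            raise ValueError(f"unknown action: {kind!r}")
    raise ValueError("the player never submitted a guess")
```

For instance, a player could ask for one hint, then translations into German and Spanish, and only then guess. All three extras stay on the form until the guess moves the process on.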

Final model

I continued to add smaller features as the conference went on, including a timer to make people answer faster and generated words of encouragement for folks who lost the game. In the end, the model looked like this:

Over the course of the conference, we wound up with these stats:

🧑 91 people played the game

❓ 231 trivia questions were asked

🎉 56 winners

😭 26 losers

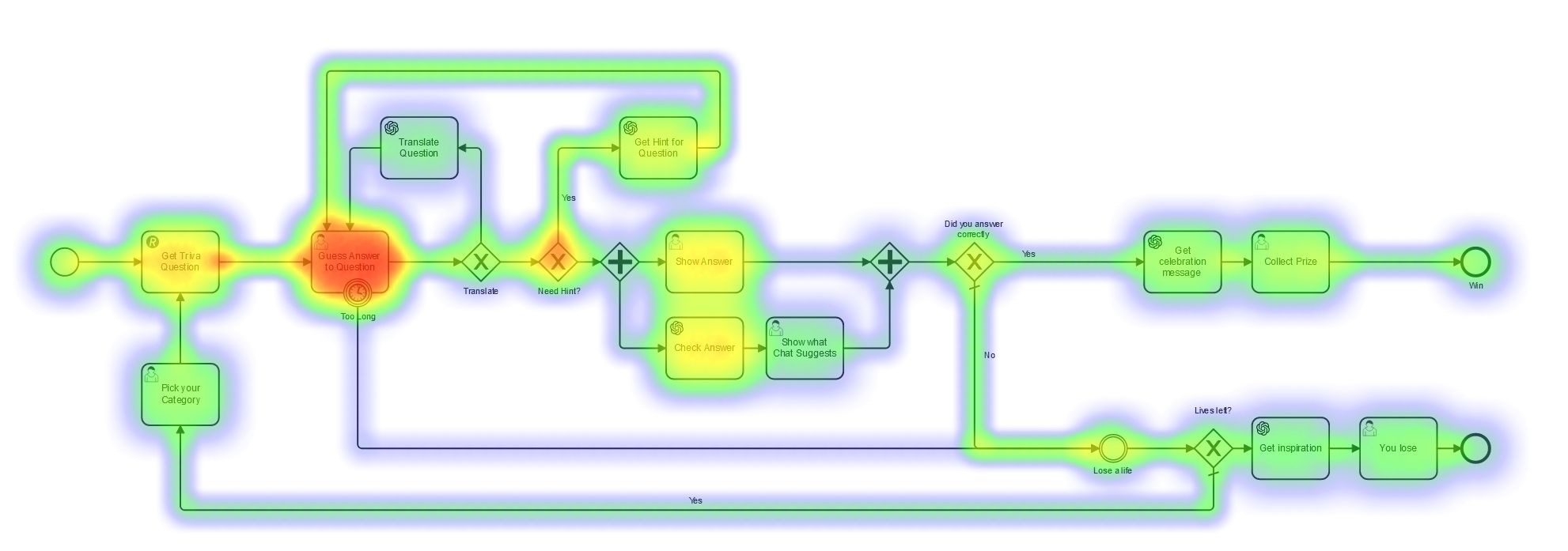

With Optimize, I can also check out the most common path through the final process.
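Optimize computes this from recorded process data. The idea behind "most common path" can be illustrated with a few lines of Python that count activity sequences across process instances. This is only an illustration of the concept, not how Optimize is implemented, and any traces fed to it (such as activity names like "Get hint") are hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence


def most_common_paths(
    paths: Iterable[Sequence[str]], n: int = 1
) -> list[tuple[tuple[str, ...], int]]:
    """Return the n most frequent complete activity sequences with their counts."""
    return Counter(tuple(path) for path in paths).most_common(n)


def activity_frequencies(paths: Iterable[Sequence[str]]) -> Counter:
    """Count how often each activity was visited across all instances.

    Per-activity counts like these are the kind of data a frequency heat map colors.
    """
    return Counter(step for path in paths for step in path)
```

An instance that loops back for a hint visits the question form twice, which is why loops like the hint/translation one show up as "hotter" activities in a frequency view.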

My colleague Samantha recorded a more detailed explanation of what's possible in Optimize, so if you're interested in how this heat map is created and what other kinds of reporting and alerting Optimize offers, check out her video.

You can play the game yourself if you like: the code is available on GitHub, and you'll just need to follow the instructions in the README to have it running in a few minutes.