In December, Camunda released Camunda 8.9-alpha2, and we’d like to share some of its highlights with you. For a list of all items included in the release, we encourage you to check out our release notes.

This alpha was all about connectors: enhancing them, creating new ones, and fine-tuning existing ones. Camunda 8.9.0‑alpha2 gives us MCP secure tool access, sturdier connectors, and a clean pattern for multi‑agent handoffs via A2A.

Let’s take a deeper look.

Welcoming A2A connectors to Camunda

The highlight of the alpha2 release is the addition of A2A connectors to Camunda.

What is Agent2Agent (A2A)?

Agent-to-Agent, Agent2Agent, or A2A refers to the direct communication and collaboration between autonomous AI agents. This communication uses open standards (the A2A protocol) for interoperability, which allows disparate systems to share information, divide complex tasks, and work together.

A2A is a significant step for large-scale AI applications that go beyond single-agent frameworks. You can think of it a bit like an open messaging system for different AIs to collaborate.

How A2A works

With interoperability between agents, you get immediate value even if your agents aren’t in the same tech stack. For example, an agent built with LangChain can hand off to another agent on CrewAI and still have the proper context, inputs, and outputs. You can mix and match agent capabilities without rewriting everything or locking into a single vendor.

When agents collaborate, they share context, divide duties, and take on tasks that are best solved as a team. The result: fewer human handoffs, clear step-by-step ownership, and better outcomes. This is enhanced with task management providing guardrails for long-running, multi-element work.

With state management and observable progress, you get predictable SLAs, easier incident management, and crisper audit trails.

A2A also uses a standardized protocol: HTTP/S for transport and JSON for data exchange and state updates. This means that you can run through existing gateways, apply known security controls, and trust the transport. It’s secure, observable, and compatible with the tooling your platform and security teams already use.
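To make the "HTTP plus JSON" point concrete, here is a minimal sketch of what a task message might look like on the wire. It assumes the JSON-RPC 2.0 envelope and the `message/send` method name used by recent versions of the A2A specification; the helper name `build_send_message_request` is hypothetical, and the field names should be checked against the spec version your agent implements.

```python
import json
import uuid


def build_send_message_request(text: str) -> dict:
    """Build a JSON-RPC 2.0 envelope carrying one text message for a remote agent."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),   # request id, used to match the response
        "method": "message/send",  # assumed method name, verify against your A2A spec version
        "params": {
            "message": {
                "role": "user",
                "messageId": str(uuid.uuid4()),
                "parts": [{"kind": "text", "text": text}],
            }
        },
    }


request = build_send_message_request("Summarize the attached claim")
body = json.dumps(request)  # this string is what would be POSTed to the agent over HTTPS
```

Because the payload is plain JSON over HTTP, it can pass through the same gateways, logging, and security controls as any other API call.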

Key benefits

There are several key benefits of implementing A2A, including:

- Scalability: Helps manage vast networks of interacting AI agents.

- Efficiency: Agents can work in parallel, increasing speed and output.

- Flexibility: Users can combine agents from various sources for specialized functions.

Camunda and A2A

With our new A2A connectors, Camunda offers a reliable and observable way for a primary agent to delegate to a specialist, wait for the results, handle timeouts, and escalate when required. The A2A protocol and A2A Client connectors with Camunda provide that pattern in BPMN: discover capabilities, send a task, and receive results through a transport between agents that fits your network reality.

With the A2A protocol, Camunda can now provide agents that communicate with each other directly as well as agents that request specific tasks from a set of tools, giving our customers the best of both worlds.

To take advantage of A2A with Camunda, you will need a couple of prerequisites in place:

- Access to an A2A-compliant agent

- Its associated Agent Card URL

The Agent Card, served at that URL, explains what the remote agent can do and how to communicate with it. Camunda then dispatches a task message from the process and chooses how results should be returned.
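As a rough illustration of the discovery step, the sketch below reads an Agent Card and extracts the details a caller would care about. The sample card is invented for this example, and the field names (`name`, `url`, `skills`, `capabilities.pushNotifications`) are assumptions based on the public A2A specification; `summarize_agent_card` is a hypothetical helper, not part of any Camunda connector.

```python
import json
from dataclasses import dataclass


@dataclass
class AgentSummary:
    name: str
    endpoint: str
    skills: list[str]
    supports_push: bool


def summarize_agent_card(card_json: str) -> AgentSummary:
    """Extract the endpoint, advertised skills, and push support from an Agent Card."""
    card = json.loads(card_json)
    capabilities = card.get("capabilities", {})
    return AgentSummary(
        name=card["name"],
        endpoint=card["url"],
        skills=[skill["id"] for skill in card.get("skills", [])],
        supports_push=bool(capabilities.get("pushNotifications", False)),
    )


# Invented sample card, for illustration only.
SAMPLE_CARD = json.dumps({
    "name": "Invoice Specialist",
    "url": "https://agents.example.com/invoice",
    "capabilities": {"streaming": False, "pushNotifications": True},
    "skills": [{"id": "extract-totals", "name": "Extract invoice totals"}],
})

summary = summarize_agent_card(SAMPLE_CARD)
```

Whether the remote agent advertises push notifications is one input into choosing between the polling and webhook patterns.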

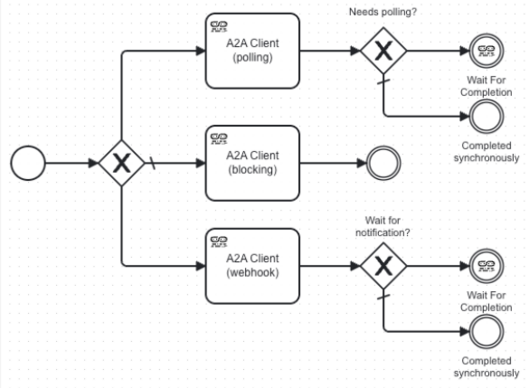

In 8.9.0‑alpha2, there are two client connectors. You’ll need to pick the one that best suits your requirements. Camunda supports both a webhook connector pattern as well as the more traditional polling approach (ie, a job worker pulling work on an interval).

A2A Client (polling)

With the Camunda A2A Client Polling connector, Camunda repeatedly checks an external system for a result or a new event on a schedule. For example, every N seconds or minutes it asks whether the AI job is complete yet. The connector polls for responses from asynchronous A2A tasks. You would usually pair the polling response retrieval method with the A2A Client connector.

This method is appropriate when the remote system cannot or will not provide a callback. In this pattern, Camunda initiates work via an outbound call or places a job on a queue and then periodically checks the status until the task is completed. This is a good fit for long-running specialist work—for example, complex RAG analysis or batch enrichment—where callbacks are blocked, unsupported, or unreliable.

As mentioned, Camunda starts the agent by making an outbound call. For example:

- HTTP/gRPC: POST /jobs to create work and receive a job ID.

- Messaging/queue: Publish a job message; the agent pulls and starts work.

- Object store trigger: Upload an input artifact; the agent watches the bucket and begins processing.

Camunda then polls a status endpoint, queue, or artifact location until completion, timeout, or error.

With this pattern, poll intervals and overall timeouts live in the BPMN model, so the process—not the agent—owns service levels. This means that the correlation is explicit; you pair your retry/backoff logic with a BPMN boundary timer that routes to a compensation path, so the primary agent never blocks.
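The poll-until-done logic described above can be sketched in a few lines. This is a generic illustration, not the connector's implementation: `poll_until_done`, `PollTimeout`, the state names, and the default intervals are all hypothetical, and in Camunda the equivalent interval and timeout settings would live in the BPMN model rather than in code.

```python
import time
from typing import Callable


class PollTimeout(Exception):
    """Raised when the overall time budget runs out before the task finishes."""


def poll_until_done(
    get_status: Callable[[], dict],
    interval_s: float = 5.0,
    timeout_s: float = 60.0,
    backoff: float = 2.0,
    max_interval_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Poll get_status until a terminal state, backing off between attempts."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status["state"] in ("completed", "failed"):
            return status
        remaining = deadline - clock()
        if remaining <= 0:
            raise PollTimeout(f"task still {status['state']!r} after {timeout_s}s")
        sleep(min(interval_s, remaining))  # never sleep past the deadline
        interval_s = min(interval_s * backoff, max_interval_s)


# Demo with a fake status source and a no-op sleep so it runs instantly.
_fake_states = iter([{"state": "working"}, {"state": "completed", "result": "ok"}])
final = poll_until_done(lambda: next(_fake_states), sleep=lambda s: None)
```

Injecting `sleep` and `clock` keeps the loop testable; the timeout exception plays the role of the boundary timer that routes the process to its escalation path.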

Polling is the right choice when you can start the job outbound but can’t accept (or trust) callbacks. You kick off the work, then you check back—on your terms, within your time budget.

A2A Client (webhook)

Camunda also exposes an endpoint via the A2A Client webhook connector, and the external system calls it when the AI job is complete or an event happens. Using this webhook makes the round-trip event-driven. You dispatch once, the agent posts results to your callback endpoint, and your process continues immediately on receipt. The webhook approach ensures that the agent is called only once.

Camunda runs this pattern in a fully event-driven way. Your orchestrated process dispatches the job once and includes a correlation key. The external system (or specialist agent) calls the webhook endpoint when the AI task completes or when a notable event occurs (eg, partial results, human-in-the-loop needed, final outcome). On callback receipt, Camunda immediately resumes the correct process instance, so latency comes down to network plus verification time.
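The two steps that happen on callback receipt, verification and correlation, can be sketched as follows. This is an illustration under stated assumptions rather than how the connector works internally: the HMAC-SHA256 signature scheme, the `correlationKey` field name, and the in-memory `waiting` map are all hypothetical stand-ins.

```python
import hashlib
import hmac
import json
from typing import Optional


def verify_signature(secret: bytes, body: bytes, signature_hex: str) -> bool:
    """Check an HMAC-SHA256 signature over the raw request body."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature_hex.encode())


def handle_callback(
    secret: bytes, body: bytes, signature_hex: str, waiting: dict
) -> Optional[tuple]:
    """Return (process_instance, payload) for a valid callback, else None."""
    if not verify_signature(secret, body, signature_hex):
        return None  # reject unsigned or tampered callbacks
    payload = json.loads(body)
    instance = waiting.pop(payload.get("correlationKey"), None)
    if instance is None:
        return None  # unknown or already-handled correlation key
    return instance, payload


# Demo: one process instance is waiting on correlation key "order-1".
secret = b"shared-secret"
waiting = {"order-1": "process-instance-42"}
body = json.dumps({"correlationKey": "order-1", "result": "approved"}).encode()
signature = hmac.new(secret, body, hashlib.sha256).hexdigest()
resumed = handle_callback(secret, body, signature, waiting)
```

Removing the key from `waiting` once it is matched means a duplicate delivery of the same callback is ignored instead of resuming the process twice.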

With this approach, you eliminate polling noise and get a natural fit for elastic workloads. You are able to stop constant checking and let the system respond only when something happens. That cuts wasted compute and avoids traffic spikes during busy periods. It’s a great fit for time-sensitive steps—like fraud checks, real-time offers, or quick AI summaries—where you want the next action to start as soon as the result is ready. It also works well when your internal teams or vendors can send a secure callback to a preapproved address.

The webhook method is appropriate when your flow includes latency-sensitive tasks that should progress as soon as results are ready. It is also a good choice when you are building more real-time, event-driven agentic orchestration patterns, or when internal specialist agents or vendors are able to sign and deliver callbacks to an allowlisted endpoint.

Quick rules of thumb

When you’re choosing between webhook callbacks and polling, begin with the business case, derive technical goals, and select webhooks or polling to meet them.

Some key considerations can help point you in the right direction for your needs, as outlined below.

Use polling when

- The source system doesn’t support webhooks. This is common with legacy systems.

- There are security constraints. Your system is behind a strict firewall/VPN and cannot receive incoming traffic from the internet.

- You want/need to control the load. You determine when your system is ready to process more data (backpressure).

Use webhooks when

- Speed is critical. You need to know about a payment, a new user, or a system failure immediately.

- Scaling. Webhooks are generally more efficient for the provider, as they don’t have to handle thousands of “Any updates?” requests.

- You are connecting SaaS tools. Most modern platforms (eg, Stripe, GitHub, Slack, Shopify) are designed to work via webhooks.

Business impact of A2A agents

Camunda’s A2A support delivers measurable business value, such as:

- Clear accountability. The primary agent delegates. Camunda orchestrates timeouts, retries, and escalation. No “lost in the loop” tasks.

- Vendor portability. A consistent contract lets you swap specialist agents without rewriting process logic.

- Observability. The orchestration layer is the “single pane” for SLAs and exceptions, whether results arrive via pull or push.

MCP security advancements

This release includes security enhancements to our MCP connectors. The MCP Client now supports OAuth, API key, and custom header authentication, plus streamable HTTP transport. In practice, you can align agent tool calls with the same policies you use for humans and services, keep a zero-trust posture, and still give long-running tasks the responsive I/O they need for incremental outputs.

With these MCP updates:

- You can register tools once, attach a policy for who and what can call them, and get auditability without one-off reverse proxies.

- You get streamable HTTP, which removes a class of timeouts and chunking hacks for larger payloads and progressive responses.

- You get standard authorization modes that let platform teams reuse existing identity controls instead of inventing exceptions for agents.
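To show how the three authentication modes differ at the HTTP level, here is a small sketch that turns an auth setting into request headers. It is not the MCP Client connector's configuration format: `AuthConfig`, `build_auth_headers`, the mode names, and the default `X-API-Key` header name are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AuthConfig:
    mode: str  # "oauth", "api_key", or "custom_headers" (hypothetical mode names)
    token: str = ""                    # access token already obtained via OAuth
    api_key: str = ""
    api_key_header: str = "X-API-Key"  # assumed header name, servers differ
    headers: dict[str, str] = field(default_factory=dict)


def build_auth_headers(cfg: AuthConfig) -> dict[str, str]:
    """Translate an auth setting into the HTTP headers sent with each tool call."""
    if cfg.mode == "oauth":
        return {"Authorization": f"Bearer {cfg.token}"}
    if cfg.mode == "api_key":
        return {cfg.api_key_header: cfg.api_key}
    if cfg.mode == "custom_headers":
        return dict(cfg.headers)  # copy so callers cannot mutate the config
    raise ValueError(f"unsupported auth mode: {cfg.mode!r}")


oauth_headers = build_auth_headers(AuthConfig(mode="oauth", token="abc123"))
key_headers = build_auth_headers(AuthConfig(mode="api_key", api_key="k-1"))
```

Keeping the mode in configuration rather than in each call site is what lets platform teams apply the same identity controls to agent tools as to other services.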

If you model these calls in BPMN and run them in Zeebe, the new auth options keep identity symmetrical across job workers, human task apps, and agent tools. The payoff is long-term: consistent auth across agent tools and fewer production surprises.

From an architect’s view, this release introduces changes to MCP Client element templates and runtime configuration that require updates. You must update the MCP Client and MCP Remote Client connectors to use version 1 of the element templates.

Review the documentation for more information.

Connector enhancements

Camunda made some significant improvements to several connectors in this release to enhance usability, concurrency, and authorization. Here’s a summary of what we have improved with this alpha release.

Amazon Textract connector

We have enhanced the usability of our Amazon Textract connector with improved input field visibility as well as cleaner polling logic.

Azure Blob Storage connector

With support for OAuth 2.0 and direct alignment with enterprise identity, there are no more one-off SAS keys lingering in hard-to-find stores.

Email connector

We have added support for a “no authentication” mode for SMTP, a useful feature for customers running local mail servers. This also simplifies your test environments and reduces configuration drift between development and production.

Virtual threads (Self-Managed)

We now provide more I/O concurrency with less thread contention by using virtual threads by default. This improves both performance and scalability: the connector runtime can handle a larger number of concurrent jobs with lower resource consumption, which particularly benefits the I/O-bound workloads typical in connector operations.

Closing

With our 8.9.0-alpha2 release, Camunda furthers its commitment to governed, observable, and secure enterprise automation. We have strengthened our connectors with additional functionality and authorization options. With the introduction of agent-to-agent collaboration, we now provide a structured and robust way for agents to work together.

Check out our release notes to see all the new features provided with Camunda 8.9.0-alpha2.
