As your organization embraces AI, it is of the utmost importance to apply best practices and the proper guardrails to your orchestrated processes. In this blog, we provide suggestions and recommendations on how best to keep your AI agents on track when using Camunda for your process orchestration needs.

Let’s get started.

Why you need best practices and guardrails

As you know, AI agents are very powerful, but they often have a mind of their own. They need some guidance to keep them in check. As companies start to experiment with AI agents in process automation, they can encounter risks such as escalating costs, unclear value, and compliance exposure if they lack proper orchestration, governance, and transparency.

Picture a claims processing team that just added an AI agent to its intake flow. As processing picks up, the agent starts classifying documents, drafting summaries, and nudging cases forward.

Then the model's behavior starts to drift, and your auditor asks for the decision trail behind the three most recent claim denials. Your team quickly realizes that it cannot answer simple questions, like which version of the prompt ran, what triggered an agent escalation, or why retries spiked last Tuesday. You learn the hard way that powerful agents without guardrails create more questions than answers.

If agents are built without best practices or proper guardrails, they might multiply calls to external models, create inconsistent outcomes across teams, and even blur the line between assistive automation and operational decision making. You do not want to have AI chaos on the loose. That can lead to rising costs without clear value, inconsistent customer experiences, and compliance risk because no one can explain how work moved through the system.

Enterprises do not tolerate black boxes in core processes. They shouldn’t begin now.

Key phrases to understand

Before we get started, let’s make sure you’re familiar with some terms used in this blog post:

- Process orchestration. Process orchestration is the coordination of systems, people, and decisions across an end-to-end workflow using a standard like BPMN. It has explicit state, SLAs, and auditability baked into the process.

- Agentic orchestration. Agentic orchestration refers to the coordination of AI agents, tools, and handoffs, including constraints such as escalations, timers, and human approvals.

- Enterprise agentic automation. Enterprise agentic automation (EAA) is where process orchestration and agentic orchestration meet: agents are embedded as tasks inside a governed, stateful process, backed by versioned models and prompts, policy controls, observability, and a single source of truth for process execution.

A deterministic process is a process with expected inputs that produce a sequenced set of steps without ambiguity or randomness. The behavior is predictable, repeatable, and fully governed by predefined rules and logic.

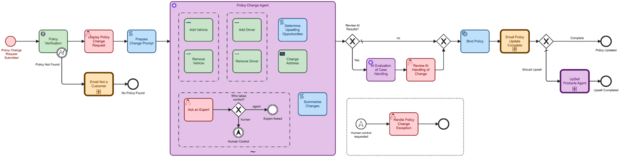

An example of a deterministic process might look something like this when modeled with Business Process Model and Notation (BPMN).

A dynamic process is a process whose path can change at runtime based on context, events, data, or decisions made by people or AI agents. Instead of following a fixed sequence, it adapts as conditions evolve, allowing new steps, exceptions, or outcomes to emerge while the process is running. Generally speaking, a dynamic process runs as a sub-process within the scope of a deterministic process.

An example of a dynamic (and deterministic) process might look something like the one shown below. The areas in purple denote AI agents modeled for Camunda.

With Camunda, you have a single model from design to execution:

- Model in Modeler

- Connect systems using connectors

- Execute on Zeebe

- Monitor active and completed processes with Operate

- Review performance, SLAs, bottlenecks, and more with Optimize

Agents are treated like any other element in your BPMN diagram: you can see their inputs and outputs and handle their incidents the same way.

Plain and simple, AI agents should never run as “free agents.” They belong inside a stateful, end-to-end process, such as a process model built in Camunda, that supplies the proper guardrails to keep them on track.

With Camunda, BPMN maps the flow, Decision Model and Notation (DMN) sets decision guardrails, connectors govern tool calls, and humans step in at the right gateways. The result is end-to-end visibility into cost, quality, and compliance.

When agents live inside orchestrated business processes, you can version prompts, roll back safely, escalate to a person, and prove to an auditor exactly what happened.

When they live outside processes, you get clever demos and fragile operations. The enterprise pattern is not to replace orchestration with agents, but to augment orchestration with agents.

Recommendations for AI agents

Let’s cover some best practices so you can get the most out of AI while maintaining the proper checks and balances.

Start with the process goal, then design the agent

One important thing to remember is your overall process goal. You do not want to start designing agents without keeping your final process goal in mind. For example, the process goal might be to onboard a new customer with an associated business goal of completing this onboarding in under five minutes.

So, you want to be sure to clarify your business goal before building an AI agent or picking an LLM. Some key things to consider before jumping in are the following:

- Understand the process goal and the business outcome you are optimizing.

- Identify where the process really needs dynamic behavior versus deterministic automation.

- Use BPMN and DMN to capture deterministic logic and decisions visually so that business and IT are aligned from the start.

Separate deterministic from dynamic logic

Essentially, the principle in this approach is to model your deterministic behavior as classic BPMN process steps with DMN decisions, where appropriate. Reserve AI agents for dynamic, reasoning-heavy tasks.

Once this logic is separated, you can determine which sections or tasks in your process lend themselves to the dynamic patterns of an AI agent, such as:

- Messy, unordered input

- Unique data structures between service calls

- Multisystem integration for a single output

- Unpredictable procedure after each task result

- Looping task activations and planning loops

It is best to keep everything else, such as calculations, rule-based decisions, and fixed routing, in deterministic automation, not in the agent.
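As a rough illustration, the split can be expressed as a design-time check over a single task. The Python sketch below is hypothetical: `TaskProfile` and `placement` are illustrative names, not Camunda APIs, and the flags simply mirror the dynamic patterns listed above. Rule-based work always wins over dynamic signals, so calculations and fixed routing stay deterministic.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    """Hypothetical description of one task in a process model."""
    name: str
    messy_input: bool = False
    varying_data_structures: bool = False
    multi_system_integration: bool = False
    unpredictable_next_step: bool = False
    needs_planning_loop: bool = False
    # Calculations, rule-based decisions, and fixed routing.
    is_calculation_or_rule: bool = False


def placement(task: TaskProfile) -> str:
    """Return 'deterministic' or 'agent' for a task, using the criteria above."""
    if task.is_calculation_or_rule:
        return "deterministic"
    dynamic_signals = [
        task.messy_input,
        task.varying_data_structures,
        task.multi_system_integration,
        task.unpredictable_next_step,
        task.needs_planning_loop,
    ]
    # Only tasks that show at least one dynamic pattern go to an agent.
    return "agent" if any(dynamic_signals) else "deterministic"


gather = TaskProfile("Gather royalty statements", messy_input=True, multi_system_integration=True)
payout = TaskProfile("Calculate payout", is_calculation_or_rule=True)
```

In this sketch, `placement(gather)` returns `"agent"` and `placement(payout)` returns `"deterministic"`.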

Keep your goals targeted and outcomes predictable

It is imperative to right-size your AI agent. You can accomplish this by keeping your goals precise and your outcomes clear. Define a specific goal for the agent, not for the entire process. A good way to do this is to treat the agent as a “process step with brains” that contributes to a larger orchestration.

Start by defining the goal the agent should pursue; for example, “collect all the data needed for a Know Your Customer (KYC) decision” or “summarize all the contract versions for this case in preparation for the case worker.” Be sure to limit the scope. By narrowing the goal, you can save money, reduce hallucinations, and increase the accuracy of your agent.

Know what is suited for an agent and what isn’t

Make sure to decide what your agent should accomplish so that you can clarify its goal for optimal results. Here’s an idea of what an agent should and should not do in your overall orchestrated process.

What an agent should do:

- Choose which tools to execute in the proper order to achieve its goal.

- Interpret tool results and assess whether more context is needed.

- Recontextualize existing data (summaries, explanations, restructuring content).

What an agent should not do:

- Create brand-new business/process data that has not been validated by deterministic rules or humans.

- Make final deterministic calculations or decisions—these should live in DMN or rule engines.
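In Camunda, final decisions like these belong in a DMN decision table. Purely to illustrate the semantics, here is a minimal Python sketch of a decision table with a first-hit policy, where rules are checked top to bottom and the first match wins. The KYC input names and the risk threshold of 70 are hypothetical; the agent's job would be to collect these inputs, not to produce the decision.

```python
from typing import Any, Callable

# Each rule pairs a condition over the input data with an output.
# Rules are evaluated in order and the first match wins (DMN "FIRST" hit policy).
Rule = tuple[Callable[[dict[str, Any]], bool], str]

KYC_RULES: list[Rule] = [
    (lambda d: d["sanctions_hit"], "reject"),
    (lambda d: d["risk_score"] >= 70, "manual_review"),  # hypothetical threshold
    (lambda d: d["documents_verified"], "approve"),
]
DEFAULT_OUTPUT = "manual_review"  # anything unmatched goes to a human


def decide(data: dict[str, Any], rules: list[Rule] = KYC_RULES) -> str:
    """Evaluate a first-hit decision table, falling back to DEFAULT_OUTPUT."""
    for condition, output in rules:
        if condition(data):
            return output
    return DEFAULT_OUTPUT
```

Any case that matches no rule falls through to manual review, which keeps the default path conservative.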

Leave the agent as quickly as possible

Once your agent has accomplished its goal (or failed), you want to make sure to hand the process back to deterministic flow as fast as possible. Use the agent to explore, plan, and prepare data, but then move back to the defined process.

This approach provides stronger governance and explainability for your agentic orchestration. It also delivers more predictable costs, because agent usage is often the most expensive part of your process. Finally, it makes business rules easier to test and version.

Use your system prompt as a guardrail

Your system prompt, if written properly, can also be used as a guardrail for your agentic orchestration. It is all about how it is written and the clarity of the prompt.

The goal of the system prompt is separate from the goal of the agent. Clarify the agent’s goal, or business outcome, and keep it distinct from the instructions in your system prompt. You want your system prompt to provide:

- Statement of the agent’s objective

- Definition of the allowed tools and behaviors that can be used

- Explanation of failure modes, safety constraints, and escalation paths

There are a few key things that you will want to include in your system prompt:

- Clearly defined success conditions with instructions to prefer tools over internal reasoning whenever possible. In other words, “if a tool is provided, the agent must use it instead of guessing.”

- Explicit instructions that the agent is not to generate any new data unless a specific tool in its toolset requires it.

- Instructions for how the agent is to behave if it cannot succeed. An example of this might be “if the required data is unavailable, stop and ask for human assistance.”
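One way to keep these elements explicit is to assemble the system prompt from named parts rather than writing it freehand. This is a minimal sketch, assuming a Python-based prompt template; `PromptSpec`, `build_system_prompt`, and the tool names are hypothetical, while the instruction wording comes from the guidance above.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a system prompt."""
    objective: str
    allowed_tools: list[str]
    success_condition: str
    failure_instruction: str
    forbidden: list[str] = field(default_factory=list)


def build_system_prompt(spec: PromptSpec) -> str:
    """Assemble a short, structured system prompt, one instruction per line."""
    lines = [
        f"Objective: {spec.objective}",
        "Allowed tools: " + ", ".join(spec.allowed_tools),
        "If a tool is provided, use it instead of guessing.",
        "Do not generate new data unless a specific tool requires it.",
        f"Success: {spec.success_condition}",
        f"If you cannot succeed: {spec.failure_instruction}",
    ]
    lines += [f"Never: {item}" for item in spec.forbidden]
    return "\n".join(lines)


kyc_prompt = build_system_prompt(PromptSpec(
    objective="Collect all the data needed for a KYC decision.",
    allowed_tools=["fetch_customer_record", "fetch_id_documents"],  # hypothetical tools
    success_condition="every required KYC field is filled from a tool result.",
    failure_instruction="if the required data is unavailable, stop and ask for human assistance.",
    forbidden=["make the final pass/fail decision"],
))
```

Keeping the parts separate also makes it easier to version each one alongside the process model.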

What to avoid in system prompts

Keep your system prompts concise and clear. Here are some things to avoid:

- Overly detailed guidance. Don’t try to provide a script of the step-by-step actions the agent should take. If that is the goal, use a DMN table.

- Tool usage instructions. Use the documentation and BPMN element descriptions to explain how a tool should be used, rather than putting that guidance into your system prompt.

- Words like “by any means necessary.” This just invites unsafe behavior.
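If you review prompts in a pipeline, a small lint step can catch some of these issues automatically. The sketch below relies on assumptions: the phrase list (beyond “by any means necessary”) and the 300-word limit are illustrative values you would tune, not recommendations from Camunda.

```python
# Illustrative list; only "by any means necessary" comes from the guidance above.
RISKY_PHRASES = ("by any means necessary", "whatever it takes")


def lint_system_prompt(prompt: str, max_words: int = 300) -> list[str]:
    """Return warnings for a draft system prompt (an empty list means no findings)."""
    warnings = []
    lowered = prompt.lower()
    for phrase in RISKY_PHRASES:
        if phrase in lowered:
            warnings.append(f"risky phrase: '{phrase}'")
    # Long prompts often hide step-by-step scripts that belong in the process model.
    word_count = len(prompt.split())
    if word_count > max_words:
        warnings.append(
            f"{word_count} words; consider moving step-by-step logic out of the prompt"
        )
    return warnings
```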

Teach your agent how to fail

AI agents are relentless in pursuing success, and they will keep trying to achieve their set goals. Because of this, it is important to design your agent with very explicit exit strategies. This means setting the proper boundaries for success and failure.

As previously mentioned, you need to define a clear set of goals, preferably only one or two, that include the exact conditions under which the agent should declare success and failure. The agent should know that when it declares failure, it has an exit plan. You can do this by modeling these boundaries explicitly in BPMN as alternative flows, error events, and human-in-the-loop tasks.

Treat failure as a first-class outcome, one the agent is explicitly allowed to reach. For example, instruct your agent to:

- Stop after a configurable number of retries or planning loops.

- Return a structured “cannot complete” result with reasons and context.

- Escalate to another agent or a human task as dictated by the process model.

This makes failures observable and auditable in Camunda Operate, prevents long-running agents from spinning indefinitely, and helps control costs.
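The failure behaviors above can be sketched as a bounded loop that always returns a structured result. In this hypothetical Python example, `plan_step` stands in for one planning or tool-calling round of an LLM agent, and `AgentResult` is an illustrative shape for the “cannot complete” outcome; neither is a Camunda or LLM-vendor API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class AgentResult:
    """Structured outcome the process can route on."""
    status: str  # "completed" or "cannot_complete"
    output: Optional[dict] = None
    reason: Optional[str] = None
    context: dict = field(default_factory=dict)


def run_bounded_agent(
    plan_step: Callable[[dict], Optional[dict]],
    state: dict,
    max_iterations: int = 3,
) -> AgentResult:
    """Call plan_step until it returns a result or the iteration budget runs out.

    plan_step returns a dict when the goal is met, or None to keep going.
    """
    for iteration in range(1, max_iterations + 1):
        result = plan_step(state)
        if result is not None:
            return AgentResult("completed", output=result, context={"iterations": iteration})
    # Budget exhausted: report failure with reasons and context instead of looping on.
    return AgentResult(
        "cannot_complete",
        reason=f"goal not reached after {max_iterations} iterations",
        context={"iterations": max_iterations, "last_state": dict(state)},
    )
```

In a process model, the `status` field is the kind of value an exclusive gateway or error event could route on, sending `cannot_complete` results to a human task along with the recorded context.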

Make agents part of a larger process with agentic BPMN

Your AI agents should not be the main orchestrator in processes; they should be participants in a greater orchestration, blending deterministic and dynamic steps into one executable model. In practice, you can treat each agent as a service task that calls an external platform, or as a dedicated “agent sub-process” with its own planning and reflection structure.

And don’t forget that agent tools are not always one-to-one with tasks. You can use most BPMN elements as tools; for example, a Call activity for complex, reusable processes like KYC checks or credit scoring. You can place gatekeeper tasks before certain agent tools, like money movement or system reconfiguration, so your agent can never call them directly.
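In Camunda, the gatekeeper would be a modeled step, such as a user task or DMN check, placed in front of the tool. The Python closure below only illustrates the principle that the agent is handed a guarded version of a sensitive tool and never the raw one. `transfer_funds`, `ApprovalRequired`, and the set of approved case IDs are hypothetical stand-ins.

```python
from typing import Any, Callable


class ApprovalRequired(Exception):
    """Raised when a gated tool is called without a recorded approval."""


def gatekeeper(tool: Callable[..., Any], approvals: set) -> Callable[..., Any]:
    """Wrap a sensitive tool so it runs only for cases a human has approved.

    `approvals` stands in for the outcome of a user task modeled in front
    of the tool; the set is read at call time, so later approvals apply.
    """
    def guarded(case_id: str, *args: Any, **kwargs: Any) -> Any:
        if case_id not in approvals:
            raise ApprovalRequired(f"{tool.__name__} needs approval for {case_id}")
        return tool(case_id, *args, **kwargs)

    return guarded


def transfer_funds(case_id: str, amount: float) -> str:
    """Hypothetical money-movement tool; never exposed to the agent directly."""
    return f"transferred {amount:.2f} for {case_id}"


approved_cases = {"case-42"}
# Only the guarded version would be registered as an agent tool.
agent_transfer = gatekeeper(transfer_funds, approved_cases)
```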

With the full power of BPMN, you can use events and event sub-processes to trigger remediation, compensations, or alerts when agents are not performing their best. Sub-processes inside the agent’s scope can gather potentially helpful data, historical cases, and documents, while the agent plans its next action.

Because Camunda’s engine is stateful and event-driven, sub-processes can run asynchronously while still preserving full auditability and context—something most pure agent frameworks are unable to provide on their own.

As before, it is very important to document what each tool does and when and why the agent should use it, including restrictions, preconditions, and authentication or verification steps. An example might be something like this: “Use this tool to retrieve a user’s account history, but only after the user’s identity has been verified upstream.”

And always prefer existing process variables over AI-generated values wherever possible to keep agents grounded in trusted data.
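Here is a minimal sketch of both ideas, assuming tools are described to the agent as plain data: each tool carries a usage description and a precondition checked against process variables, and arguments are resolved from process variables first. `ToolSpec`, `resolve_argument`, `can_call`, and the variable names are hypothetical; the description text is the example from above.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolSpec:
    """Hypothetical tool description an agent reads before calling a tool."""
    name: str
    description: str
    precondition: Callable[[dict], bool]


ACCOUNT_HISTORY = ToolSpec(
    name="get_account_history",
    description=(
        "Use this tool to retrieve a user's account history, but only after "
        "the user's identity has been verified upstream."
    ),
    precondition=lambda process_vars: process_vars.get("identity_verified") is True,
)


def resolve_argument(name: str, process_vars: dict, agent_value: Any = None) -> Any:
    """Prefer a trusted process variable over a value the agent generated."""
    if name in process_vars:
        return process_vars[name]
    return agent_value


def can_call(tool: ToolSpec, process_vars: dict) -> bool:
    """Check the tool's precondition against the current process variables."""
    return tool.precondition(process_vars)
```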

Keep the orchestrator (Camunda) as the single source of truth for state and history. Agents stay stateless or short-state. Governance flows through BPMN/DMN, not through prompts alone.

Choose and tune LLMs for enterprise agentic automation

Model your process and the agent scope before selecting the LLM to use in that model. When deciding on the proper LLM for your AI agent, you may want to start with a generally capable model like Claude Sonnet 4.x or a comparable tier. You can start here and then move to more expensive, complex models if it’s necessary for your agent.

You will want to consider how to define the following elements of your AI agent as they relate to the LLM:

- Agent goal

- Maximum tokens

- User prompts

- Responses

- Memory

Taking the recommendations from earlier, if you narrow your agents’ goals and loops, you reduce token usage, which helps control costs. The faster you get out of the agent and back to the deterministic process, the better you can optimize for cost and accuracy.

Using Retrieval-Augmented Generation (RAG) for domain-specific knowledge, instead of prompting your agent with the entire knowledge base, will also minimize your costs.
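To illustrate the RAG idea, the sketch below ranks a small knowledge base against the agent’s question and passes only the top matches into the prompt. Term overlap is a deliberately simple stand-in for the embedding similarity a real RAG pipeline would typically use, and the documents are made up for the example.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents sharing the most terms with the query."""
    query_terms = Counter(tokenize(query))
    scores = {
        doc_id: sum((query_terms & Counter(tokenize(text))).values())
        for doc_id, text in documents.items()
    }
    # sorted() is stable, so ties keep the knowledge base's original order.
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical knowledge base; a real one would live in a document store.
knowledge_base = {
    "royalty-policy": "Royalty rates for streaming and radio play by territory",
    "kyc-guide": "Identity documents accepted for KYC checks",
    "travel-policy": "Employee travel and expense rules",
}

question = "What royalty rates apply to streaming?"
top_ids = retrieve(question, knowledge_base, k=1)
# Only the retrieved passages go into the user prompt, not the whole knowledge base.
context = "\n".join(knowledge_base[doc_id] for doc_id in top_ids)
```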

Configure your user prompts

And don’t forget your user prompts. These play a very important role and should be treated as dynamic, per-instance input that can include relevant documents and process context to help the agent achieve its goal.

You also want to consider agent response formats so that responses can be used properly by downstream components. This may also guide your choice of LLM, since not all LLMs can reliably respond with properly formatted JSON. If yours cannot, you can add a subsequent step that converts the response to JSON, but factor that step into your design.
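A downstream step can check the agent’s reply before anything else consumes it. The sketch below uses only Python’s standard `json` module; the field names are hypothetical (loosely based on the document extraction pattern described later in this post), as is the choice to return an error message so the process can route to a repair step or human task.

```python
import json
from typing import Any

# Hypothetical response contract for a document-summarization agent.
REQUIRED_FIELDS = {"document_type": str, "summary": str, "confidence": (int, float)}


def parse_agent_response(raw: str) -> tuple[bool, Any]:
    """Validate an agent's JSON reply before passing it downstream.

    Returns (True, data) for a JSON object with the expected fields and types,
    otherwise (False, error_message).
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return False, "expected a JSON object"
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in data:
            return False, f"missing field: {name}"
        if not isinstance(data[name], expected_type):
            return False, f"wrong type for {name}"
    return True, data
```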

Use agent memory primarily for short-term reasoning and then rely on Camunda’s durable process state and external stores for long-term memory and compliance needs.
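Here is a minimal sketch of short-term agent memory, assuming a simple sliding window: only the last few turns stay in the agent’s context, and anything that must outlive the agent run is written to process variables instead. `ShortTermMemory`, the window size, and the variable name are illustrative, not a Camunda feature.

```python
from collections import deque


class ShortTermMemory:
    """Sliding window of recent reasoning turns for a single agent run."""

    def __init__(self, max_turns: int = 4) -> None:
        # deque drops the oldest turn automatically once maxlen is reached.
        self._turns: deque = deque(maxlen=max_turns)

    def add(self, turn: str) -> None:
        self._turns.append(turn)

    def as_context(self) -> str:
        """Render the remembered turns for inclusion in the next prompt."""
        return "\n".join(self._turns)


memory = ShortTermMemory(max_turns=2)
for turn in ["fetched contract v1", "fetched contract v2", "drafted summary"]:
    memory.add(turn)

# Durable facts go to process state (hypothetical variable), not agent memory.
process_variables = {"summary_status": "drafted"}
```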

Patterns from real-world use cases

Let’s look at a few examples of how these recommendations can be applied for the best possible outcomes.

Royalties and financial entitlements

- The goal: Ensure an artist receives all royalties they are entitled to.

- Agent role: When a request comes in, the agent gathers all required information across messy, fragmented systems.

- Guardrail: Once data is complete, hand off to DMN and deterministic flows for actual payout calculation and compliance checks.

KYC and onboarding checks

- The goal: Complete KYC assessment while reducing manual review.

- Agent role: Orchestrate data collection, document interpretation, and risk summarization.

- Guardrail: Final pass/fail decision remains in a deterministic KYC decision model, with human-in-the-loop for edge cases.

Document extraction and summarization

- The goal: Extract and summarize key points from complex customer documents.

- Agent role: Identify document types, extract relevant passages, produce a structured summary.

- Guardrail: Process orchestration ensures sensitive information is masked, outputs are logged, and risky actions are kept out of the agent’s reach.

Incident resolution

- The goal: Resolve worker incidents in the field as quickly as possible.

- Agent role: When an incident occurs, an agent attempts first-level resolution by collecting details, querying systems, and suggesting next steps.

- Guardrail: If the agent cannot resolve safely, the process routes to human experts with full context and an auditable trail.

Practical guardrails for agentic orchestration checklist

Let’s review a quick checklist of things to remember when implementing AI agents with Camunda:

- Scope: Is the agent’s goal narrow, clearly defined, and embedded in a larger process?

- Logic split: Is deterministic logic modeled in BPMN/DMN, with agents focusing on dynamic reasoning only?

- Prompt: Does the system prompt clearly define success, failure, tool usage, and forbidden behaviors?

- Exit strategy: Have you modeled explicit success, failure, and escalation paths in the process?

- Tools: Are tools documented, constrained, and wrapped in safe BPMN patterns like gatekeepers and Call Activities?

- State and audit: Are long-running agents backed by stateful process execution and independent audit trails?

- Governance: Do you have versioning, migration, and monitoring in place for agents, prompts, and tools?

This handy infographic documents this checklist.

Unlocking enterprise agentic automation safely

Agents shine when implemented to address messy, exception-heavy work. The win comes when you blend intelligence with control—agentic orchestration inside an orchestrated process—not when you replace governance with black-box AI.

Remember to start small. For example, model an end-to-end business process in Camunda, introduce a narrowly scoped agent with strong guardrails, and expand as evidence accumulates.

This approach provides value to your organization in several ways:

- Cost: Short agent loops and early handoffs cut token spend.

- Risk: Deterministic decisions and explicit escalations reduce compliance exposure.

- Speed: Reusable subprocesses and connectors accelerate delivery without losing control.

- Clarity: Operate and Optimize make agent behavior observable and improvable.

Read more on why AI belongs in your process orchestration strategy.
