Kafka and Camunda

In this post I’m going to talk through the best and easiest way to integrate Camunda with Kafka, specifically using the two cloud services that make these technologies easily available: Camunda SaaS and Confluent Cloud. I’ll be using a full code example that you can follow and run yourself. But first, I want to discuss a question I’ve been asked a lot: why would someone use both Camunda and Kafka in their architecture? (Feel free to skip ahead if you already know 🙂)

Camunda is used as a component to orchestrate distributed systems. Fundamentally, it decides what needs to run and when to run it, which can sound a lot like what Kafka does. Kafka is, after all, an event streaming platform that also aims to trigger systems to do some work. We’ve found over the years that Kafka and Camunda are very often used together because, despite their similar-sounding goals, the two technologies focus on very different things. It comes down to decision making. Camunda is responsible for deciding what should happen, while Kafka facilitates decisions made by other components. Kafka can’t assess a complete context and make decisions by itself.

What this means is that when Camunda and Kafka are used together, Camunda decides what should happen and Kafka delivers that decision to the rest of the distributed system.

For this to work, Camunda needs to send events to Kafka and also respond to events that have been sent to Kafka from other systems.

It’s now easier than ever to use Camunda and Kafka together thanks to our built-in inbound and outbound Kafka Connectors.

- The outbound Kafka Producer Connector sends a message to a Kafka topic

- The inbound Kafka Consumer Connector can do a couple of things:

  - When attached to a BPMN Start Event, it triggers a process instance to start in response to a message that’s sent to a specific Kafka topic

  - When attached to an Intermediate Catch Event, it can be used to have a process instance wait until a message is sent to a specific Kafka topic

Details of the example

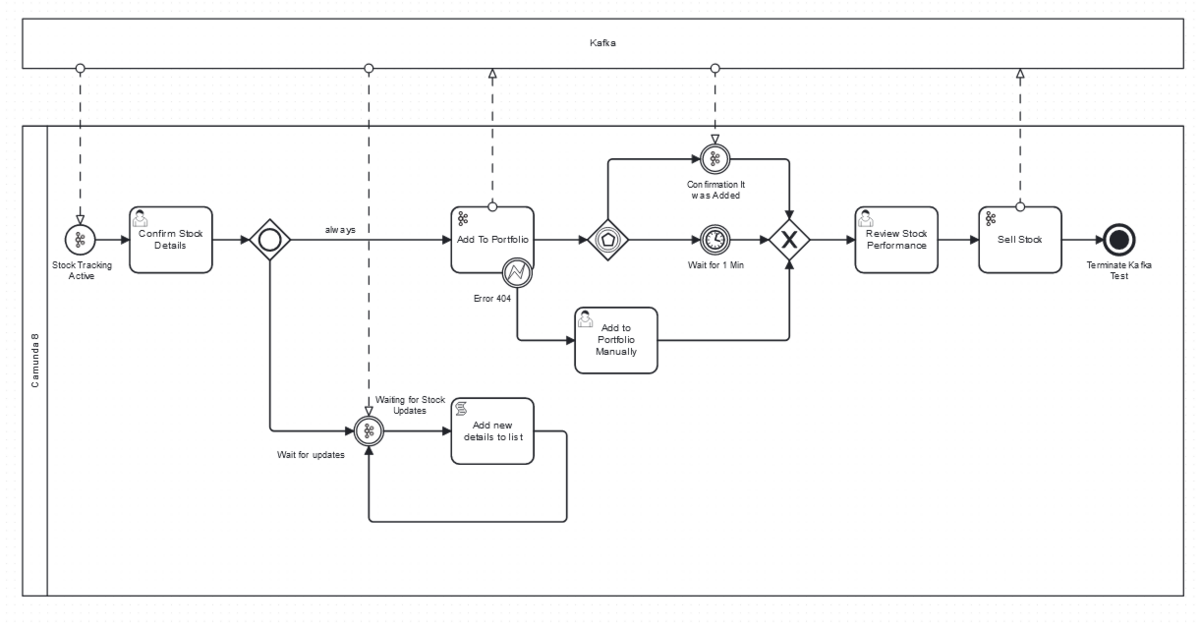

In this pseudo-financial example, when a message arrives at the NewDetails topic, it triggers our “Stock Tracking Process.” I know nothing about stocks, but I’ve watched a documentary about the 1929 Black Thursday market crash, so I think I get the basic idea.

I need to create a Camunda process with a start event that can be triggered by a message from this topic. I want to make sure I add that stock to my portfolio, which is done by sending a message to the Portfolio topic. While all this is happening, I want to receive any updates for the stock as they come in, and finally, I want to be able to sell the stock when I’m done with it by sending another message.

Credentials and account creation

You’ll need a Kafka instance set up (the easiest place to do that is confluent.cloud) and a Camunda cluster (the easiest place to do that is Camunda SaaS). Then the fun can start.

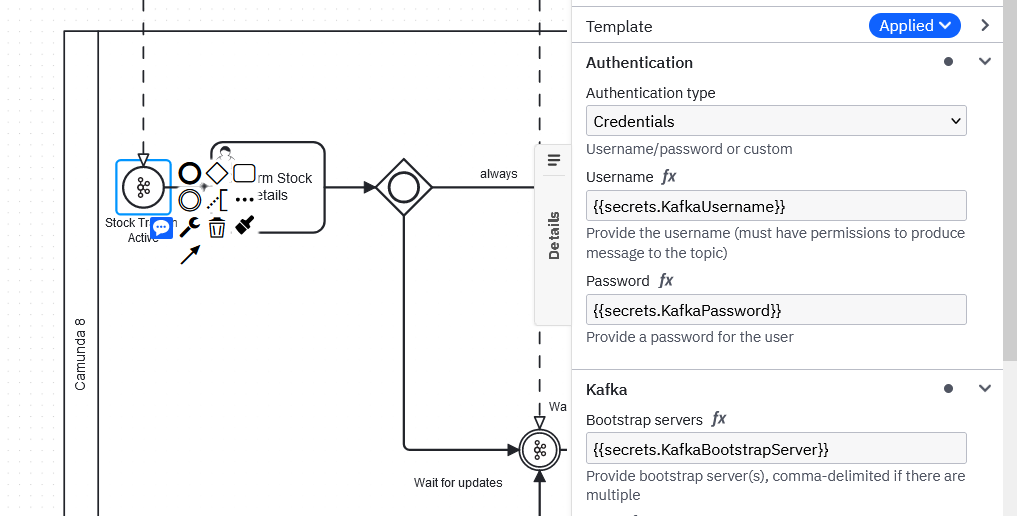

Connecting the two systems requires authentication, so you’ll need the following from your Kafka instance:

- Username: you’ll get this when you create an API key for your Kafka instance

- Password: like the username, this is generated as part of the API key creation

- Bootstrap Servers: after you create a Kafka cluster, you’ll find this in the cluster settings
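These three values are what a Kafka client uses to reach a Confluent Cloud cluster. As a rough illustration, here’s a Python sketch of how they might map onto a client configuration. It assumes the librdkafka-style configuration keys used by the `confluent-kafka` Python package and Confluent Cloud’s usual SASL_SSL/PLAIN authentication, where the API key acts as the username and the API secret as the password. The helper name `confluent_cloud_config` is hypothetical; in Camunda you enter the same values in the Connector’s fields instead.

```python
def confluent_cloud_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Build a librdkafka-style client config for a Confluent Cloud cluster.

    Hypothetical helper: assumes Confluent Cloud's usual SASL_SSL + PLAIN setup,
    where the API key is the SASL username and the API secret is the password.
    """
    if not bootstrap_servers:
        raise ValueError("bootstrap_servers is required")
    return {
        "bootstrap.servers": bootstrap_servers,  # from the cluster settings
        "security.protocol": "SASL_SSL",         # TLS-encrypted, SASL-authenticated
        "sasl.mechanisms": "PLAIN",              # username/password authentication
        "sasl.username": api_key,                # the API key
        "sasl.password": api_secret,             # the API secret
    }
```

Keep the real key and secret out of source code; load them from environment variables or a secret store.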

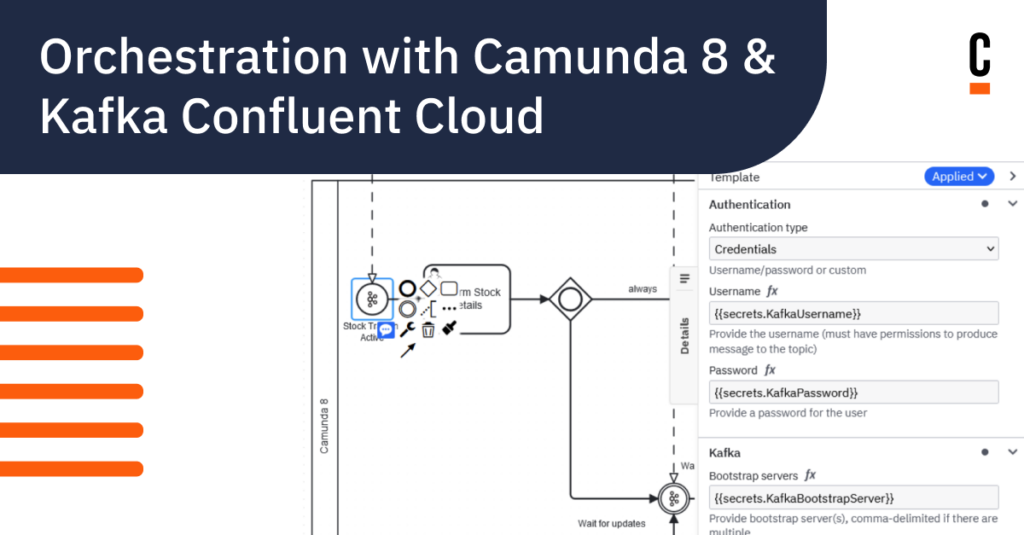

⚠ As a best practice, don’t copy and paste this sensitive information directly into the model. Instead, create a secret for each of these values in your Camunda cluster, then reference it by typing {{secrets.x}}, where x is the name of the secret you want to access.
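Camunda resolves these secret placeholders for you at runtime, so there’s nothing to implement. To make the idea concrete, though, here’s a hypothetical Python resolver that substitutes placeholders of the form `{{secrets.NAME}}` (the syntax used in Camunda’s Connector documentation) from a dictionary standing in for the cluster’s secret store. The function name `resolve_secrets` and the secret names are illustrative assumptions, not part of Camunda’s API.

```python
import re

# Matches placeholders such as {{secrets.KAFKA_USERNAME}}; names are letters, digits, underscores.
_SECRET_PATTERN = re.compile(r"\{\{\s*secrets\.([A-Za-z0-9_]+)\s*\}\}")


def resolve_secrets(field_value: str, secrets: dict) -> str:
    """Replace each {{secrets.NAME}} placeholder with the stored secret value.

    Hypothetical illustration only: Camunda performs this substitution itself.
    Raises KeyError if a referenced secret has not been defined.
    """
    def lookup(match: re.Match) -> str:
        name = match.group(1)
        if name not in secrets:
            raise KeyError(f"secret {name!r} is not defined in the cluster")
        return secrets[name]

    return _SECRET_PATTERN.sub(lookup, field_value)
```

For example, a Username field containing `{{secrets.KAFKA_USERNAME}}` becomes whatever value is stored under KAFKA_USERNAME, and a missing secret raises an error instead of silently sending an empty credential.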

Now you’re ready to upload and deploy the process.

Starting a process from a Kafka message

In this Stock Tracking Process, the start event uses a Camunda Kafka Consumer Connector. It’s set up to connect to a Kafka instance by adding the credentials created earlier.

It also has NewDetails in the Topic field to ensure it’s looking for the messages in the right place.

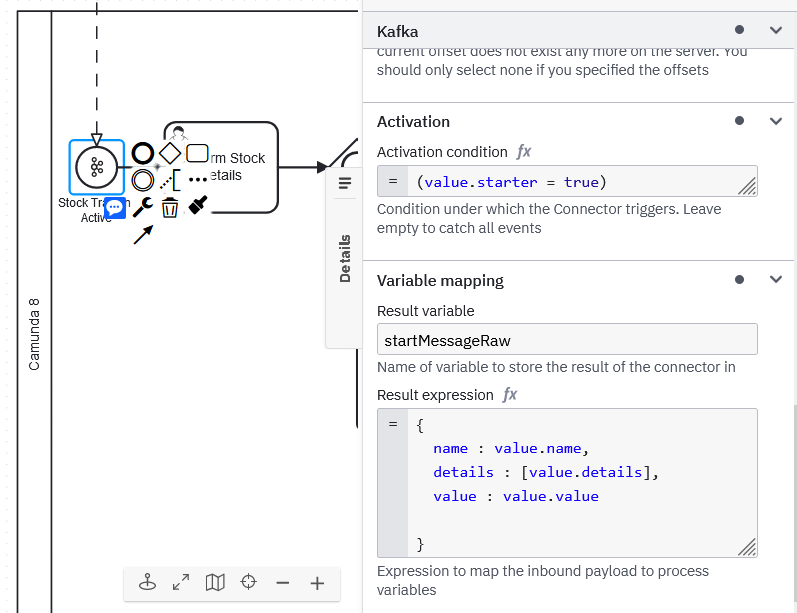

Once a message comes in, two important things happen. First, we check that the message is about a new stock; second, we parse the payload and create process variables from it.

Let’s say a message is sent to the NewDetails topic with a payload that looks like this:

```json
{
  "name": "Niall",
  "details": "Camunda",
  "value": 1000000,
  "newStock": true
}
```

To make sure the process only starts when newStock is true, we add a start condition to the start event.
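In the model this check is a condition on the start event, not code, but its effect can be sketched in Python: parse the incoming Kafka message and only start a process instance when `newStock` is literally `true`. The function name `should_start_process` is hypothetical, and treating malformed or non-object payloads as “don’t start” is an assumption about the safest behavior, not something the example specifies.

```python
import json


def should_start_process(raw_message: bytes) -> bool:
    """Return True only for messages whose JSON payload has "newStock": true.

    A sketch of the start condition's effect, not Camunda's implementation.
    Payloads that aren't JSON objects, or that lack newStock, are ignored.
    """
    try:
        value = json.loads(raw_message)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return False
    return isinstance(value, dict) and value.get("newStock") is True
```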

To access the message payload, you start with the value variable and then specify the field you want. In the screenshot you can see that to create a process variable called name, I need to get the contents of value.name. Just to make things even more complicated, I also decided to have a variable called value, so to assign it as a process variable, you’d need to write value : value.value (for more fun wordplay, try this Buffalo tangent).
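Here’s the same mapping sketched as a Python function, to show which payload field feeds which process variable. It assumes the payload has already been parsed into a dictionary called `value` and that name, details, and value are all mapped (the text only spells out name and value). `to_process_variables` is a hypothetical name; in Camunda the mapping lives in the Connector’s configuration rather than in code.

```python
def to_process_variables(value: dict) -> dict:
    """Map the parsed Kafka payload (called `value`) to process variables.

    Sketch only: mirrors name <- value.name and value <- value.value, and
    assumes details <- value.details is mapped the same way.
    """
    return {
        "name": value["name"],
        "details": value["details"],
        "value": value["value"],  # the "value : value.value" case
    }
```

Note that newStock isn’t copied: once the start condition has passed, it carries no further information for this process.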

To test it, send a message with the payload above to the NewDetails topic in Kafka. You should then see a new process instance in Operate.
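If you’d rather produce the test message from code, here’s a minimal Python sketch. It assumes the `confluent-kafka` package (its `Producer` class with `produce()` and `flush()`) and a config dictionary holding your cluster’s connection settings; `new_details_payload` and `send_test_message` are hypothetical helper names. Kafka topic names are case-sensitive, so the topic must exactly match the one configured on the start event.

```python
import json

TOPIC = "NewDetails"  # Kafka topic names are case-sensitive


def new_details_payload(name: str, details: str, value: int, new_stock: bool = True) -> bytes:
    """Serialize the example payload as UTF-8 JSON bytes."""
    return json.dumps(
        {"name": name, "details": details, "value": value, "newStock": new_stock}
    ).encode("utf-8")


def send_test_message(config: dict, payload: bytes) -> None:
    """Produce one message to the NewDetails topic.

    Assumes the confluent-kafka package is installed and `config` holds the
    cluster's bootstrap servers, API key, and API secret.
    """
    from confluent_kafka import Producer  # imported here so the helpers above work without it

    producer = Producer(config)
    producer.produce(TOPIC, value=payload)
    producer.flush(10)  # wait up to 10 seconds for delivery
```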

Send a message to a Kafka topic with Camunda

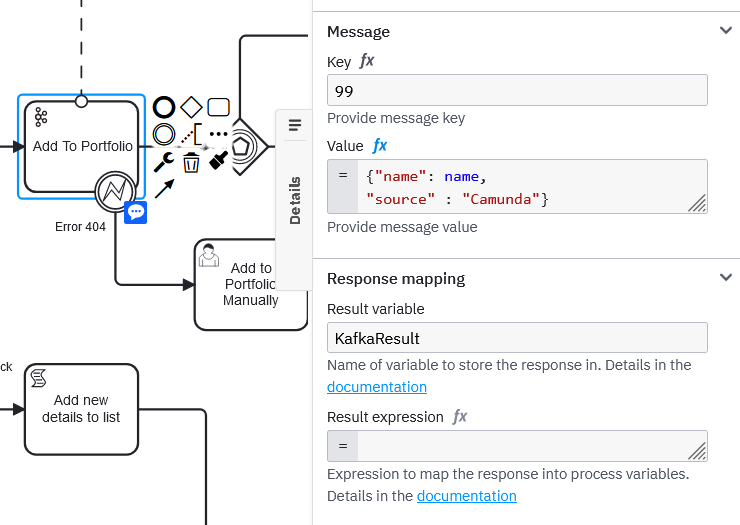

The next step in the process is a user task, but the next step relevant to Kafka is where we send a message to a Kafka topic. As before, we add the authentication details and then specify the topic name. The difference here is that we also add a payload for the message and assign the result back to a process variable.

In this example, the payload contains a field called name, which is given the value of the process variable also called name. The other field is source, which is hardcoded as Camunda.
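As a rough Python equivalent of that payload configuration, the outbound message body could be built like this. The function name `outbound_payload` and the JSON encoding are assumptions for illustration; in the model, the payload is defined in the Connector’s properties rather than in code.

```python
import json


def outbound_payload(process_variables: dict) -> bytes:
    """Build the outbound message body from the current process variables.

    Sketch only: name is copied from the process variable of the same name,
    and source is hardcoded, as in the example.
    """
    payload = {
        "name": process_variables["name"],  # taken from the process variable "name"
        "source": "Camunda",                # hardcoded value
    }
    return json.dumps(payload).encode("utf-8")
```

Any other process variables (such as value) are deliberately left out of the message.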

I’ve also added KafkaResult to the Result variable field. This creates a process variable of that name containing the response from Kafka, which is handy for debugging. You can see that variable, and all the others, at runtime using Operate.

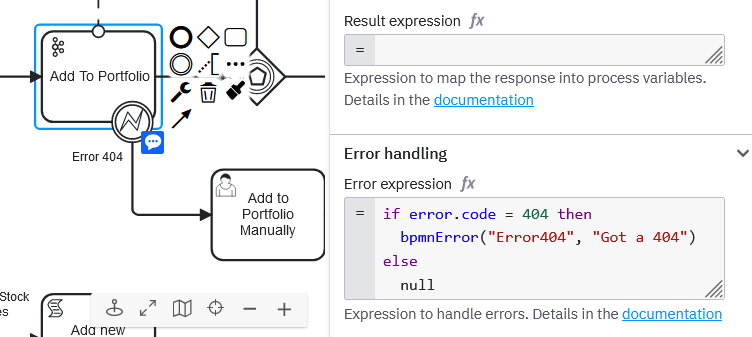

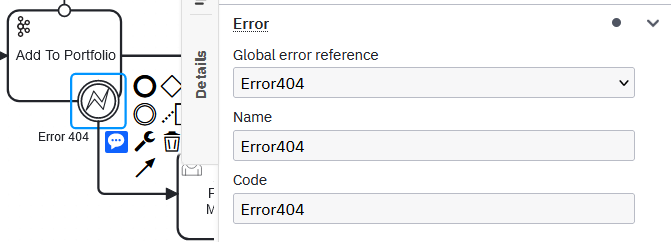

The final thing I’ve added here is a little error handling. You might have noticed the error boundary event attached to the task; we can trigger it based on the response from the Connector.

In the Error Handling section, I’ve used FEEL to throw an error called Error404 when I get a 404 error code from Kafka.

The error boundary event is configured to listen for that same error code, so it catches the error and the process continues to a user task.
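The rule this error handling implements can be sketched in Python: a 404 from the Connector is converted into a BPMN error with the code Error404, which the boundary event catches, and any other code is not converted. In Camunda this is written as a FEEL expression in the Connector’s Error Handling section, not Python; `BpmnError` and `map_connector_error` are hypothetical names, and the error message text is made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BpmnError:
    """Hypothetical stand-in for the BPMN error the Connector throws."""
    code: str
    message: str


def map_connector_error(error_code: Optional[str]) -> Optional[BpmnError]:
    """Convert a Connector error code into a BPMN error, or return None.

    A 404 becomes the BPMN error "Error404", matching the code the boundary
    event listens for. For any other code, no BPMN error is thrown.
    """
    if error_code == "404":
        return BpmnError(code="Error404", message="Kafka Connector reported a 404")
    return None
```

The important detail is that the code returned here and the code configured on the boundary event must match exactly, or the error won’t be caught.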

Now a 404 isn’t going to stop your process! Fun!

Other interesting parts of the process

This example also has some other fun features to explore and learn about. Understanding the inbound and outbound Connectors should give you enough grounding to follow everything else.

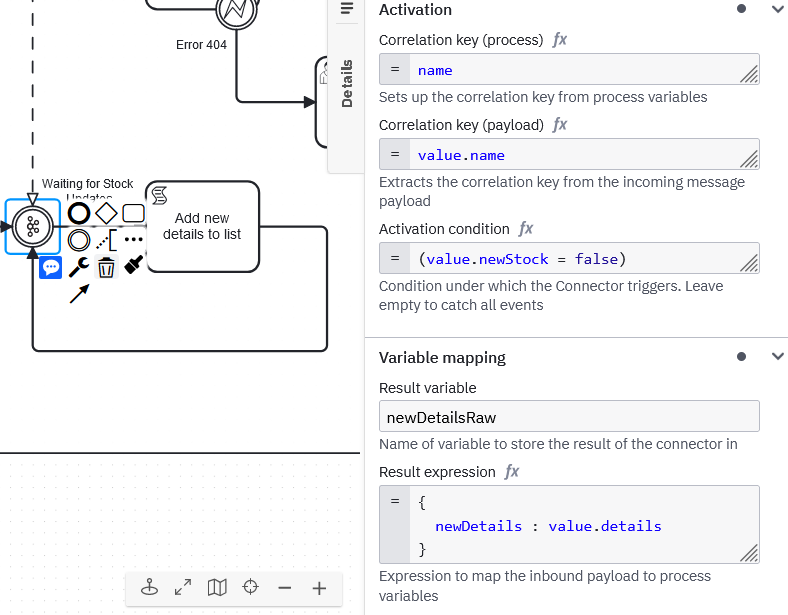

One section waits for updates about an existing stock and stores them in a list.
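Here’s a minimal Python sketch of that idea: each incoming update is appended to a list that plays the role of a process variable. It assumes updates identify the stock by a `name` field, standing in for however the real model matches updates to the right process instance (for example, a correlation key). `record_update` is a hypothetical name.

```python
def record_update(updates: list, update: dict, stock_name: str) -> list:
    """Return a new list with `update` appended if it concerns `stock_name`.

    Updates for other stocks are ignored and the original list is returned.
    Assumes each update carries the stock's name in a "name" field.
    """
    if update.get("name") != stock_name:
        return updates
    return updates + [update]
```

Returning a new list instead of mutating the old one mirrors how a process variable is overwritten with its updated value.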

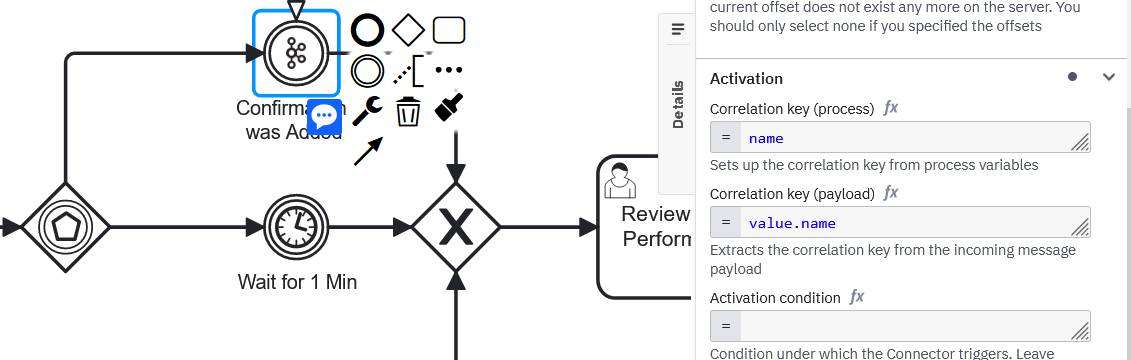

There’s also a nice little pattern that ensures that if a message from Kafka doesn’t arrive, the process won’t wait forever.
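In BPMN this kind of pattern is commonly built with an event-based gateway that races a message catch event against a timer event; this sketch assumes the model does something similar. The same race can be illustrated in Python with the standard library’s `queue.Queue.get(timeout=...)`, where the queue stands in for the Kafka topic and returning None stands in for taking the timer path. `wait_for_message` is a hypothetical name.

```python
import queue
from typing import Optional


def wait_for_message(inbox: "queue.Queue[dict]", timeout_seconds: float) -> Optional[dict]:
    """Wait for the next message, but give up after `timeout_seconds`.

    Returns the message if one arrives in time, or None if the timeout wins,
    so the caller can take an alternative path instead of waiting forever.
    """
    try:
        return inbox.get(timeout=timeout_seconds)
    except queue.Empty:
        return None
```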


Other perspectives on Kafka & Camunda

In this post I’ve focused on the how, but for those interested in the why, we previously published a great post from Amy on the benefits of event-driven process orchestration. In it, she discusses some of the considerations and advantages of adding both of these components to your architecture.

Together these posts can help you not only understand if the Kafka-Camunda combo is what you’re looking for but also how you might go about implementing it.

Want to try it out today? Get started with a free Camunda trial here.
